Troubleshooting Airflow Issues

This topic describes a couple of best practices and common issues with solutions related to Airflow.

Cleaning up Root Partition Space by Removing the Task Logs

You can set up a cron job to free root partition space consumed by task logs. An Airflow cluster usually runs for a long time, so it can accumulate a large volume of logs, which can cause problems for scheduled jobs. To clear the logs periodically, set up a cron job by following these steps:

Edit the crontab:

sudo crontab -e

Add the following line at the end and save:

0 0 * * * /bin/find $AIRFLOW_HOME/logs -type f -mtime +7 -exec rm -f {} \;
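The cron entry above runs every day at midnight and deletes files under $AIRFLOW_HOME/logs that find considers older than 7 days. Before installing it, it can help to preview which files would be removed. The following is a minimal sketch, assuming GNU find; the function name list_old_logs and its arguments are hypothetical and not part of Airflow or QDS.

```shell
# Hypothetical helper: print (do not delete) log files older than N days.
# Usage: list_old_logs <log_dir> [days]
list_old_logs() {
  local log_dir="$1"
  local days="${2:-7}"
  # -mtime +N is the same age test the cron entry uses. find rounds the
  # file age down to whole days, so +7 matches files at least 8 days old.
  find "$log_dir" -type f -mtime +"$days" -print
}

# Example (not run here): list_old_logs "$AIRFLOW_HOME/logs" 7
```

Because the helper only prints paths, it can be run safely on a live cluster to check the effect of a different retention period.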

Using macros with Airflow

Macros on Airflow describes how to use macros.

Common Issues with Possible Solutions

Issue 1: When a DAG has X number of tasks but it has only Y number of running tasks

Check the DAG concurrency in the Airflow configuration file (airflow.cfg).
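The relevant limits can be read directly from the configuration file. This is a minimal sketch, assuming an Airflow 1.x airflow.cfg in which parallelism, dag_concurrency, and max_active_runs_per_dag appear as plain key = value lines; the function name show_concurrency_settings is hypothetical.

```shell
# Hypothetical helper: print the concurrency-related settings from airflow.cfg.
# Usage: show_concurrency_settings [path_to_airflow.cfg]
show_concurrency_settings() {
  local cfg="${1:-$AIRFLOW_HOME/airflow.cfg}"
  # Match only active settings; commented-out lines start with '#'.
  grep -E '^(parallelism|dag_concurrency|max_active_runs_per_dag)[[:space:]]*=' "$cfg"
}

# Example (not run here): show_concurrency_settings "$AIRFLOW_HOME/airflow.cfg"
```

If the number of running tasks (Y) equals one of the printed values, that setting is the likely cap.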

Issue 2: When it is difficult to trigger one of the DAGs

Check the connection ID used in the task/Qubole operator. There could be an issue with the API token used in the connection. To check the connection ID, navigate to Airflow Webserver -> Admin -> Connections.

Check the datastore connection, sql_alchemy_conn, in the Airflow configuration file (airflow.cfg).

If there is no issue with the above two items, create a ticket with Qubole Support.
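For the datastore check, the sql_alchemy_conn value can be printed with its password hidden, so that the output can be pasted into a ticket. This is a minimal sketch, assuming the setting is a single key = value line with a URL of the form scheme://user:password@host/...; the function name show_sql_alchemy_conn is hypothetical.

```shell
# Hypothetical helper: print sql_alchemy_conn with the password masked.
# Usage: show_sql_alchemy_conn [path_to_airflow.cfg]
show_sql_alchemy_conn() {
  local cfg="${1:-$AIRFLOW_HOME/airflow.cfg}"
  # Keep "://user:" and replace everything up to the next '@' with ****.
  # URLs without a user:password part are printed unchanged.
  grep -E '^sql_alchemy_conn[[:space:]]*=' "$cfg" \
    | sed -E 's#(://[^:/@]+:)[^@]*@#\1****@#'
}

# Example (not run here): show_sql_alchemy_conn "$AIRFLOW_HOME/airflow.cfg"
```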

Issue 3: Tasks for a specific DAG get stuck

Check if the depends_on_past property is enabled in the airflow.cfg file. Depending on its value, apply the appropriate solution:

If depends_on_past is enabled, check the runtime of the last task that ran (whether it succeeded or failed) before the task got stuck. If that runtime is greater than the frequency of the DAG, then the DAG/tasks are stuck for this reason. It is an open-source bug. Create a ticket with Qubole Support to clear the stuck task. Before creating a ticket, gather the information as mentioned in Troubleshooting Query Problems – Before You Contact Support.

If depends_on_past is not enabled, create a ticket with Qubole Support. Before creating a ticket, gather the information as mentioned in Troubleshooting Query Problems – Before You Contact Support.

Issue 4: When manually running a DAG is impossible

If you are unable to manually run a DAG from the UI, do these steps:

Go to line 902 of the following file:

/usr/lib/virtualenv/python27/lib/python2.7/site-packages/apache_airflow-1.9.0.dev0+incubating-py2.7.egg/airflow/www/views.py

Change

from airflow.executors import CeleryExecutor

to

from airflow.executors.celery_executor import CeleryExecutor
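The same change can be scripted instead of edited by hand. This is a minimal sketch, assuming GNU sed and that the import sits alone on its line; the function name fix_celery_import is hypothetical, and on the cluster the file will probably need to be edited with sudo.

```shell
# Hypothetical helper: rewrite the CeleryExecutor import in a views.py file.
# Usage: fix_celery_import <path_to_views.py>
fix_celery_import() {
  local views_py="$1"
  # -i.bak edits in place and keeps the original as <file>.bak.
  # The captured leading whitespace (\1) preserves the line's indentation.
  sed -i.bak \
    's/^\([[:space:]]*\)from airflow\.executors import CeleryExecutor[[:space:]]*$/\1from airflow.executors.celery_executor import CeleryExecutor/' \
    "$views_py"
}

# Example (not run here): fix_celery_import /path/to/airflow/www/views.py
```

The .bak copy makes it easy to restore the original file if the edit does not resolve the issue.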

Questions on Airflow Service Issues

Here is a list of FAQs that are related to Airflow service issues with corresponding solutions.

Which logs do I look up for Airflow cluster startup issues?

Refer to the logs of the Airflow services, which are brought up during cluster startup.

Where can I find Airflow Services logs?

Airflow services are Scheduler, Webserver, Celery, and RabbitMQ. The service logs are available at the /media/ephemeral0/logs/airflow location inside the cluster node. Since an Airflow cluster is a single-node machine, the logs are accessible on the same node. These logs are helpful in troubleshooting cluster bringup and scheduling issues.

What is $AIRFLOW_HOME?

$AIRFLOW_HOME is a location that contains all configuration files, DAGs, plugins, and task logs. It is an environment variable set to /usr/lib/airflow for all machine users.

Where can I find Airflow Configuration files?

The configuration file is present at $AIRFLOW_HOME/airflow.cfg.

Where can I find Airflow DAGs?

The DAG files are available in the $AIRFLOW_HOME/dags folder.

Where can I find Airflow task logs?

The task logs are available in $AIRFLOW_HOME/logs.

Where can I find Airflow plugins?

The plugins are available in $AIRFLOW_HOME/plugins.

How do I restart Airflow Services?

You can perform start, stop, and restart actions on an Airflow service. The command for each service is given below:

Run sudo monit <action> scheduler for Airflow Scheduler.

Run sudo monit <action> webserver for Airflow Webserver.

Run sudo monit <action> worker for Celery workers. A stop operation gracefully shuts down the existing workers. A start operation adds the number of workers specified in the configuration. A restart operation gracefully shuts down the existing workers and then adds the number of workers specified in the configuration.

Run sudo monit <action> rabbitmq for RabbitMQ.
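The four commands above share one pattern, which can be captured in a small wrapper. This is a minimal sketch: the function name monit_command is hypothetical, and it only prints the command rather than running it, so a mistyped action or service name is caught before anything is restarted.

```shell
# Hypothetical helper: build the monit command for an Airflow service.
# Usage: monit_command <start|stop|restart> <scheduler|webserver|worker|rabbitmq>
monit_command() {
  local action="$1" service="$2"
  case "$action" in
    start|stop|restart) ;;
    *) echo "unsupported action: $action" >&2; return 1 ;;
  esac
  case "$service" in
    scheduler|webserver|worker|rabbitmq) ;;
    *) echo "unknown service: $service" >&2; return 1 ;;
  esac
  # Print the command instead of executing it (dry run).
  echo "sudo monit $action $service"
}

# Example (not run here): monit_command restart scheduler
```

To actually run the printed command on the cluster node, pipe the output to a shell, for example monit_command restart worker | sh.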

How do I invoke Airflow CLI commands within the node?

Airflow is installed inside a virtual environment at the /usr/lib/virtualenv/python27 location. First, activate the virtual environment with source /usr/lib/virtualenv/python27/bin/activate, and then run the Airflow command.

How to view the Airflow processes using Monit dashboard?

You can navigate to the Clusters page and select Monit Dashboard from the Resources drop-down list of an up and running cluster. To know more about how to use Monit dashboard, see Monitoring through Monit Dashboard.

How to manage the Airflow processes using Monit Dashboard when the status is Failed or Does not exist?

If the status of the process is Execution failed or Does not exist, you need to restart the process. To know more about how to restart the process with the help of Monit dashboard, see Monitoring through Monit Dashboard.

Questions on DAGs

How do I delete a DAG?

Deleting a DAG is still not very intuitive in Airflow. QDS provides its own implementation for deleting DAGs, but you must be careful using it.

To delete a DAG, submit the following command from the Analyze page of the QDS UI:

airflow delete_dag dag_id -f

The above command deletes the DAG Python code along with its history from the data source. Two types of errors may occur when you delete a DAG:

DAG isn't available in Dagbag: This happens when the DAG Python code is not found on the cluster’s DAG location. In that case, nothing can be done from the UI and it would need a manual inspection.

Active DAG runs: If there are active DAG runs pending for the DAG, then QDS cannot delete it. In such a case, you can visit the DAG and mark all tasks under those DAG runs as completed and try again.

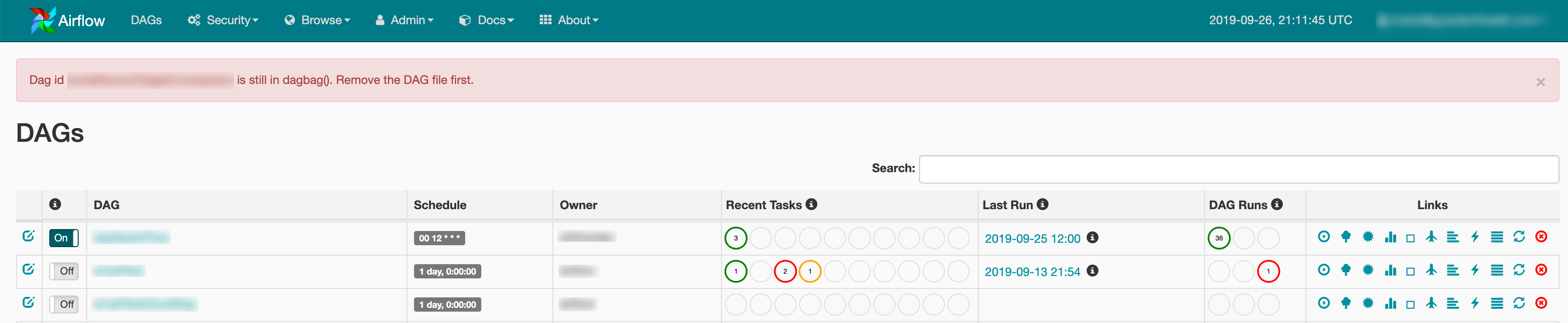

Error message when deleting a DAG from the UI

The following error message might appear when you delete a DAG from the QDS UI in Airflow v1.10.x:

<dag Id> is still in dagbag(). Remove the DAG file first.

Here is how the error message appears in the Airflow UI:

The reason for this error message is that deleting a DAG from the UI causes the metadata of the DAG to be deleted, but not the DAG file itself. In Airflow 1.10.x, the DAG file must be removed manually before deleting the DAG from the UI. To remove the DAG file, perform the following steps:

SSH into the Airflow cluster.

Go to the following path:

/usr/lib/airflow/dags

Run the following command:

grep -R "<dag_name_you_want_to_delete>"

This command returns the file path linked to this DAG.

Delete the DAG file using the following command:

rm <file_name>

With the DAG file removed, you can now delete the DAG from the QDS UI.
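The search step above can be narrowed so that it prints only the matching file paths, which makes it easier to review them before running rm. This is a minimal sketch, assuming GNU grep; the function name find_dag_files is hypothetical, and the default directory is the path given in the steps above.

```shell
# Hypothetical helper: list Python files that mention a DAG name.
# Usage: find_dag_files <dag_name> [dags_dir]
find_dag_files() {
  local dag_name="$1"
  local dags_dir="${2:-/usr/lib/airflow/dags}"
  # -R recurses, -l prints only file names, -F treats the DAG name as a
  # literal string, and --include limits the search to Python files.
  grep -RlF --include='*.py' -- "$dag_name" "$dags_dir"
}

# Example (not run here): find_dag_files my_dag_id
```

If more than one file is listed, inspect each before deleting, because a file that merely references the DAG name (for example, in a comment) is also matched.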

If you still face issues with deleting a DAG, raise a ticket with Qubole Support.

Can I create a configuration to externally trigger an Airflow DAG?

No, but you can trigger DAGs from the QDS UI using the shell command airflow trigger_dag <DAG>....

If there is no connection password, the qubole_example_operator DAG will fail when it is triggered.