Configuring the ODBC Driver

You must change the connection settings from the Windows ODBC administrator.

Note

In JDBC and ODBC driver configurations, https://api.qubole.com is the default endpoint.

The following subtopics cover the configuration-related information:

Prerequisites

Ensure that you have access to the objects and resources that you refer to in the driver configuration. If you see an access-denied error, check with the Qubole account administrator. For more information, see Resources, Actions, and What they Mean.

Note

Turning off Bypass QDS enables the legacy mode.

Configuring the ODBC Driver DSN

Perform these steps from the Windows Control Panel:

Navigate to the ODBC administrator from the Windows Control Panel. The path varies depending on the Windows version, as described in Verifying the Driver Version.

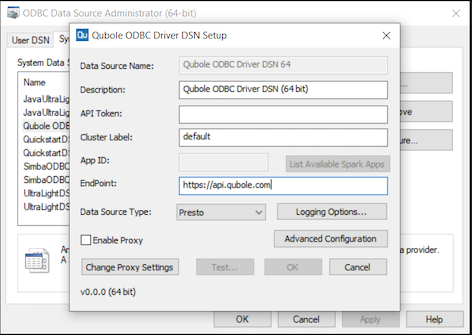

Go to the System DSN tab, select Qubole ODBC Driver DSN 64, and click Configure. The Qubole ODBC Driver DSN Setup dialog box is displayed. (The version number shown is the latest driver version.)

Enter the API Token, Cluster Label, and Endpoint, and choose an appropriate DSN name. These parameters are mandatory; App ID is also mandatory for Spark in legacy mode. Managing Your Accounts provides more information on API tokens.

The Data Source Type must be presto when the driver is in QDS Bypass mode, which is the default mode.

Click OK.
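The rules for the mandatory DSN fields can be summarized as a small pre-flight check. This is a minimal Python sketch, not part of the driver: the dictionary keys (dsn_name, api_token, cluster_label, endpoint, data_source_type, app_id, bypass_qds) and the function validate_dsn_settings are hypothetical names chosen for illustration, not the driver's registry values or connection-string keywords. It applies the documented defaults (endpoint https://api.qubole.com, Bypass QDS turned on) and then checks the mode-specific rules.

```python
from typing import Any, Dict, List

# Hypothetical setting names for this sketch; they are NOT the driver's
# real registry values or connection-string keywords.
DEFAULT_ENDPOINT = "https://api.qubole.com"  # documented default endpoint
MANDATORY_KEYS = ("dsn_name", "api_token", "cluster_label", "endpoint")


def validate_dsn_settings(settings: Dict[str, Any]) -> List[str]:
    """Return human-readable problems with a DSN configuration (empty if none)."""
    # Apply the documented defaults first: the default endpoint, and
    # Bypass QDS turned on (QDS Bypass mode is the default mode).
    effective = {"endpoint": DEFAULT_ENDPOINT, "bypass_qds": True, **settings}
    problems = [
        f"missing mandatory parameter: {key}"
        for key in MANDATORY_KEYS
        if not effective.get(key)
    ]
    source_type = str(effective.get("data_source_type", "")).lower()
    if effective["bypass_qds"]:
        # QDS Bypass mode: the Data Source Type must be presto.
        if source_type != "presto":
            problems.append("Data Source Type must be presto in QDS Bypass mode")
    elif source_type == "spark" and not effective.get("app_id"):
        # Legacy mode: Spark additionally needs an App ID.
        problems.append("App ID is mandatory for Spark in legacy mode")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets a setup script report every missing field at once.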

Note

For more information on setting the bucket region and catalog name, and on getting results directly from S3, see Setting Additional Configuration.

Setting Additional Configuration

This section describes the advanced driver configuration. Listing and Creating Spark Apps describes how to list and create Spark Apps when the driver is in legacy mode.

Note

Turning off Bypass QDS enables the legacy mode.

To set the advanced configuration, perform these steps:

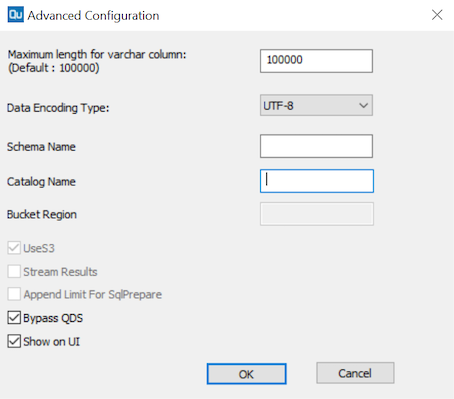

On Qubole ODBC Driver DSN Setup, click Advanced Configuration. The following dialog is displayed.

You can change the following properties under Advanced Configuration:

Maximum length of varchar column: Set this value if you do not want to use the default, which is 100000.

However, some tools require the maximum value to be set below a particular limit.

Example: For Microsoft SQL Server, this value must be 8000 or less.
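Choosing a value for Maximum length of varchar column amounts to taking the smaller of the driver default and the client tool's own limit. The following minimal Python sketch assumes you know that limit; choose_max_varchar_length and its tool_limit parameter are hypothetical names, and 8000 is the Microsoft SQL Server limit stated above.

```python
from typing import Optional

DEFAULT_MAX_VARCHAR_LENGTH = 100000  # driver default, per the docs


def choose_max_varchar_length(tool_limit: Optional[int] = None) -> int:
    """Pick a varchar length the client tool can accept (hypothetical helper).

    tool_limit is the largest varchar length the tool supports, for example
    8000 for Microsoft SQL Server, or None if the tool imposes no limit.
    """
    if tool_limit is None:
        return DEFAULT_MAX_VARCHAR_LENGTH
    if tool_limit <= 0:
        raise ValueError("tool_limit must be a positive integer")
    # Never exceed either the tool's limit or the driver default.
    return min(DEFAULT_MAX_VARCHAR_LENGTH, tool_limit)
```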

Data Encoding type: Choose the encoding type of the data, either UTF-8 or Windows-1252. The default type is UTF-8.

Schema Name: Enter a schema name to filter metadata to that schema, so that only the configured TABLE_SCHEM is exposed when metadata is fetched.

Catalog Name: Specify the data catalog name. The driver supports only Presto in QDS Bypass mode, but it supports Hive, Presto, and Spark in legacy mode.
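Why the Data Encoding type must match the data can be seen by decoding the same bytes both ways. This Python sketch only illustrates the two encodings the driver offers; decode_value is a hypothetical helper, not a driver function, and it maps Windows-1252 to Python's cp1252 codec explicitly.

```python
# Map the driver's encoding choices to Python codec names.
DRIVER_ENCODINGS = {"UTF-8": "utf-8", "Windows-1252": "cp1252"}


def decode_value(raw: bytes, encoding_type: str = "UTF-8") -> str:
    """Decode raw column bytes with the chosen Data Encoding type (hypothetical)."""
    codec = DRIVER_ENCODINGS[encoding_type]  # KeyError for unsupported types
    return raw.decode(codec)


# "café" is stored as b"caf\xc3\xa9" in UTF-8 but b"caf\xe9" in Windows-1252.
utf8_bytes = "caf\u00e9".encode("utf-8")
print(decode_value(utf8_bytes))                  # correct: café
print(decode_value(utf8_bytes, "Windows-1252"))  # mismatch: cafÃ©
```

Reading UTF-8 data as Windows-1252 yields mojibake such as cafÃ©. The reverse mismatch usually raises a decode error.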

UseS3: Selected by default, this option bypasses the QDS Control Plane and fetches results directly from the S3 location. The driver supports this option only in legacy mode.

Note

To enable legacy mode, deselect/turn off the Bypass QDS option.

Bucket Region: Specify the AWS region of the S3 object storage that holds the final results (the default storage location, defloc), which you read data from or write data to. If you do not specify the AWS region, it defaults to the us-east-1 AWS region. The driver supports this option only in legacy mode.

Stream Results: Select this option to enable Presto FastStreaming, which streams results directly from AWS S3 in the ODBC driver. Without it, the driver waits for the query to finish before downloading any results from the QDS Control Plane or from S3. Streaming can improve BI tool performance because results are displayed as soon as they are available in S3. Presto FastStreaming for the ODBC driver is supported in Presto versions 0.208 and later. The driver supports this option only in legacy mode.

Because streaming cannot be used with Presto Smart Query Retry, enabling Presto FastStreaming automatically disables Presto Smart Query Retry.

Note

Create a ticket with Qubole Support to enable the Presto FastStreaming feature on the account.
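The interplay of the legacy-only options above (UseS3, Bucket Region, Stream Results) can be modeled in a few lines. This is a minimal Python sketch under stated assumptions: effective_legacy_options and its parameter names are hypothetical; Presto versions are assumed to be plain dotted numbers such as "0.208"; and the account-level enablement of FastStreaming through Qubole Support is not modeled.

```python
from typing import Any, Dict, Optional, Tuple

DEFAULT_BUCKET_REGION = "us-east-1"  # documented default region
MIN_FASTSTREAMING_PRESTO = (0, 208)  # FastStreaming needs Presto 0.208 or later


def parse_presto_version(version: str) -> Tuple[int, ...]:
    """Turn a plain dotted version such as "0.208" into (0, 208)."""
    return tuple(int(part) for part in version.split("."))


def effective_legacy_options(
    bypass_qds: bool,
    use_s3: bool = True,
    bucket_region: Optional[str] = None,
    stream_results: bool = False,
    presto_version: Optional[str] = None,
) -> Dict[str, Any]:
    """Hypothetical model of how the legacy-only advanced options combine."""
    if bypass_qds:
        # UseS3, Bucket Region, and Stream Results apply only in legacy mode.
        return {}
    if stream_results and (
        presto_version is None
        or parse_presto_version(presto_version) < MIN_FASTSTREAMING_PRESTO
    ):
        raise ValueError("Presto FastStreaming requires Presto 0.208 or later")
    return {
        "use_s3": use_s3,
        "bucket_region": bucket_region or DEFAULT_BUCKET_REGION,
        "stream_results": stream_results,
        # Streaming cannot be combined with Presto Smart Query Retry.
        "smart_query_retry_disabled": stream_results,
    }
```

Raising an error for an unsupported Presto version, instead of silently turning streaming off, is a design choice of this sketch that surfaces the misconfiguration early.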

Append Limit for SqlPrepare: This configuration is now deprecated.

Bypass QDS: This property is only applicable to the third-generation ODBC driver’s QDS Bypass mode.

Note

Currently, this property only applies to AWS. Do not select this option for the Azure cloud endpoint, azure.qubole.com.

By default, this property is set to true, which allows the driver to communicate directly with the Presto master to submit commands and fetch results. If you turn off this property, the driver behaves as the ODBC driver does in legacy mode.

Show on UI: This property is only applicable to the third-generation ODBC driver’s QDS Bypass mode. By default, this property is set to true, which displays the queries on Qubole’s UI. For queries executed through the third-generation ODBC driver, it also returns QueryHistID as part of the error message.

Click OK.
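The Bypass QDS and Show on UI rules, together with the restriction to AWS, can be expressed as a small check. This minimal Python sketch uses hypothetical helpers, bypass_qds_allowed and resolve_bypass_settings. It only models the one non-AWS endpoint named in this document, azure.qubole.com. It does not recognize other non-AWS endpoints.

```python
from typing import Any, Dict
from urllib.parse import urlparse

AZURE_ENDPOINT_HOST = "azure.qubole.com"  # Bypass QDS must stay off here


def bypass_qds_allowed(endpoint: str) -> bool:
    """Return False for the Azure endpoint, where Bypass QDS is not supported."""
    if "//" not in endpoint:
        endpoint = "https://" + endpoint  # let urlparse find the host
    host = urlparse(endpoint).hostname or ""  # hostname is lowercased
    return host != AZURE_ENDPOINT_HOST


def resolve_bypass_settings(
    endpoint: str, bypass_qds: bool = True, show_on_ui: bool = True
) -> Dict[str, Any]:
    """Hypothetical resolution of the v3 mode settings (both default to true)."""
    if bypass_qds and not bypass_qds_allowed(endpoint):
        raise ValueError("Do not select Bypass QDS for azure.qubole.com")
    return {
        "bypass_qds": bypass_qds,
        "mode": "QDS Bypass" if bypass_qds else "legacy",
        # Show on UI applies only in QDS Bypass mode.
        "show_on_ui": show_on_ui if bypass_qds else None,
    }
```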

Modes of ODBC Driver Version 3.0 for Windows

The third-generation ODBC driver supports two modes:

Legacy mode: Bypass QDS is turned off (deselected). The driver behaves just like its previous version, 2.1.1.

QDS Bypass mode: Bypass QDS is enabled. This is the default behavior of the third-generation ODBC driver.

The following table shows which properties are supported by ODBC driver version 2.1.1 (and by version 3.0 in legacy mode) and by version 3.0 in QDS Bypass mode.

| Property Name | Version 2.1.1/Legacy mode in version 3 | QDS-Bypass Mode in version 3 |
|---|---|---|
| API Token | | |
| Cluster Label | | |
| EndPoint | | |
| Data Source Type | | |
| App ID | | |
| Catalog Name | | |
| Schema Name | | |
| Bucket Region | | |
| Use S3 | | |
| Stream Results | | |
| Bypass QDS (v3) | | |
| Show on UI (v3) | | |

Listing and Creating Spark Apps

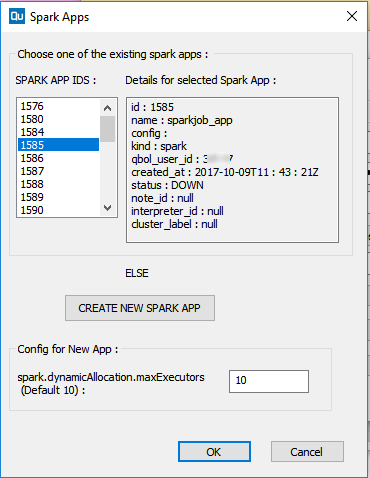

You can list the Spark Apps available for the entered API token and endpoint. Spark is supported only when the ODBC driver is in legacy mode. To enable legacy mode, deselect/turn off Bypass QDS.

On Qubole ODBC Driver DSN Setup, choose Spark as Data Source Type. Ensure that the correct API token and endpoint have been entered. Click List Available Spark Apps. The Spark Apps dialog box is displayed.

Select one of the listed apps, each identified by its App ID, or click CREATE NEW SPARK APP to create a new Spark app.

For the new app, you can change the configuration value of spark.dynamicAllocation.maxExecutors. Its default value is 10.
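The new-app setting can be sketched as a Spark configuration dictionary. This is a hypothetical Python helper, not the dialog's actual behavior. It assumes the app is created in legacy mode, because Spark is unavailable in QDS Bypass mode. It uses the standard Spark property spark.dynamicAllocation.maxExecutors with the documented default of 10.

```python
from typing import Dict, Optional

DEFAULT_MAX_EXECUTORS = 10  # documented default for new Spark apps


def new_spark_app_conf(
    max_executors: Optional[int] = None, bypass_qds: bool = False
) -> Dict[str, str]:
    """Build the Spark configuration for a new app (hypothetical helper)."""
    if bypass_qds:
        raise ValueError("Spark apps require legacy mode (turn off Bypass QDS)")
    value = DEFAULT_MAX_EXECUTORS if max_executors is None else max_executors
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int) or value < 1:
        raise ValueError("maxExecutors must be a positive integer")
    # Spark configuration values are conventionally passed as strings.
    return {"spark.dynamicAllocation.maxExecutors": str(value)}
```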