7. Why is my cluster scaling beyond the configured maximum number of nodes?

When you use multiple worker node types to configure a heterogeneous cluster, autoscaling can cause the actual number of nodes running in the cluster to exceed the configured Maximum Worker Nodes. This is because the goal of autoscaling is to ensure that the cluster’s overall capacity meets (but does not exceed) the needs of the current workload. In a homogeneous cluster, in which worker nodes are of only one instance type, capacity is simply the result of the number of nodes times the memory and cores of the configured instance type. But in a heterogeneous cluster, a given capacity can be achieved by more than one mix of instance types, including some mixes in which the total number of nodes exceeds the configured Maximum Worker Nodes. But the cluster will never exceed the configured maximum capacity, which QDS computes from the capacity of the primary instance type times the worker-node maximum you configure.

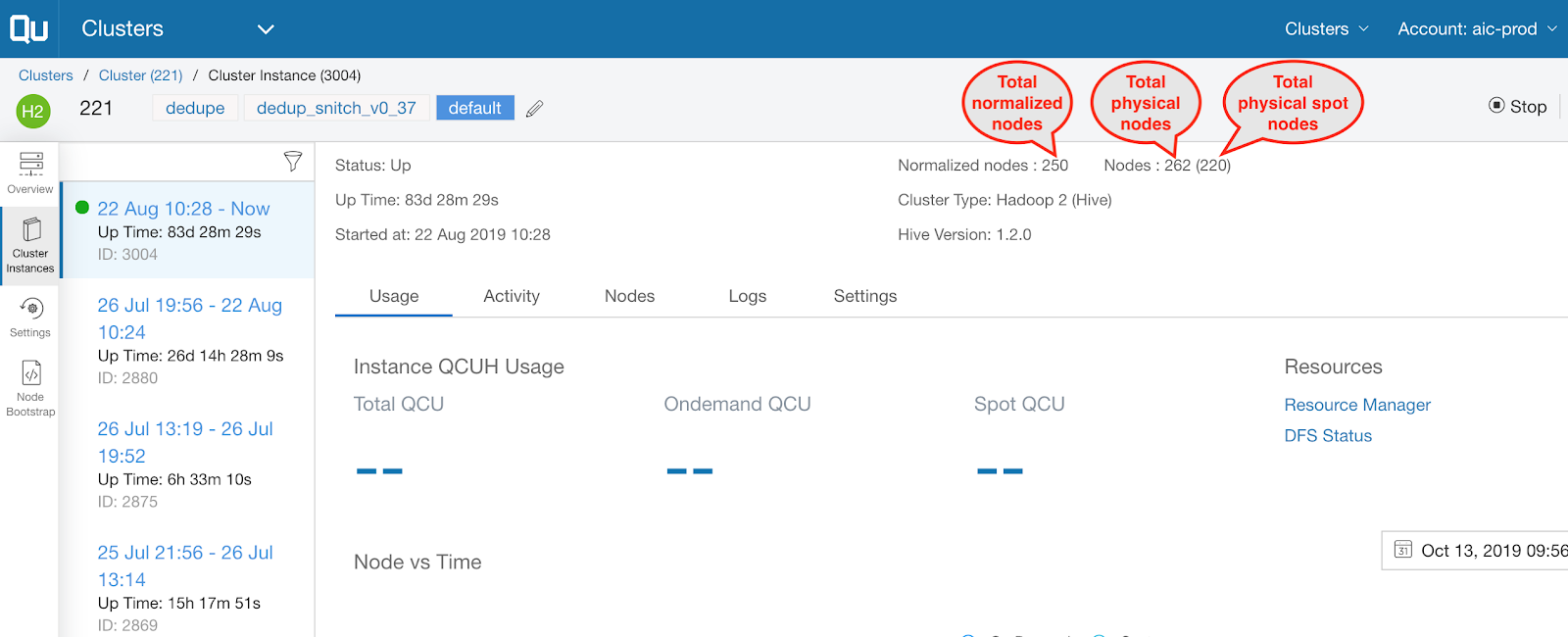

The QDS UI uses the term normalized nodes to show the number of nodes that would be running if they were all of the primary instance type. The number of normalized nodes running will never exceed the configured Maximum Worker Nodes.

For example, in an AWS cluster:

7.1. Upscaling Example (AWS)

Note

This example uses AWS instance types, but similar calculations are done for any supported Cloud.

Assume your cluster has the following heterogeneous configuration:

Primary Worker Instance Type - r4.2xlarge

Secondary Worker Instance Types - r4.xlarge and r4.4xlarge

An r4.xlarge instance has 4 cores and 30.5 GB memory with an EC2 cost of $0.5x.

An r4.2xlarge instance has 8 cores and 61 GB memory with an EC2 cost of $1x.

An r4.4xlarge instance has 16 cores and 122 GB memory with an EC2 cost of $2x.

Suppose workload requirements require the cluster to upscale by 100 nodes of the primary worker instance type (r4.2xlarge). This means that the cluster needs additional capacity equivalent to 100 x r4.2xlarge (i.e: 800 cores and 6.1 TB memory).

Given the cluster configuraton described in the previous bullet, QDS will meet this need in one of following three ways:

200 r4.xlarge instances; or

100 r4.2xlarge instances; or

50 r4.4xlarge instances.

All three of the above options will add very similar capacity (in this example, 800 cores and 6.1TB memory) and cost you more or less the same (in this example, $100x). All three amount to the equivalent of adding 100 nodes of the primary instance type (r4.2xlarge).