Compose a Hadoop Job

Use the command composer on Workbench to compose the following types of Hadoop jobs: custom JAR, streaming, and DistCp.

Note

Before running a Hadoop job, ensure that the output directory is new and does not already exist.

Hadoop and Presto clusters support running Hadoop jobs. See Mapping of Cluster and Command Types for more information. See running-hadoop-job for an example.
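One way to satisfy the note about output directories is to derive a fresh output path for every run. The following is a minimal sketch, not part of Workbench: the helper name `new_output_path` and the bucket path are hypothetical, and the timestamp suffix is only one possible naming scheme.

```python
from datetime import datetime, timezone
from typing import Optional


def new_output_path(base: str, now: Optional[datetime] = None) -> str:
    """Return `base` plus a UTC timestamp suffix so each run gets a new directory."""
    # Use the supplied time (handy for testing) or the current time, normalized to UTC.
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    stamp = now.strftime("%Y%m%dT%H%M%SZ")
    # Strip any trailing slash so the result never contains "//" after the base.
    return f"{base.rstrip('/')}/run-{stamp}"


# Hypothetical base location; substitute your own storage path.
print(new_output_path("s3://example-bucket/wordcount/output"))
```

Pass the resulting path as the job's output argument so that the job never writes to a directory left behind by an earlier run.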

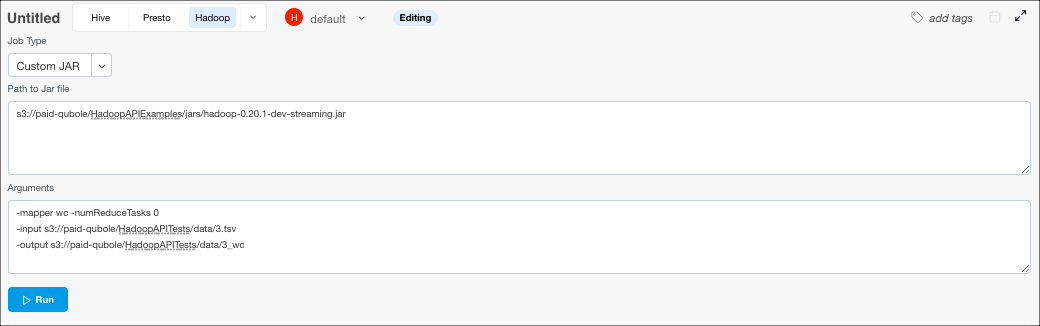

Compose a Hadoop Custom Jar Query

Perform the following steps to compose a Hadoop jar query:

Navigate to Workbench and click + New Collection.

Select Hadoop from the command type drop-down list.

Choose the cluster on which you want to run the query. View the health metrics of a cluster before you decide to use it.

Leave Custom JAR selected in the Job Type drop-down list; it is the default.

In the Path to Jar file field, specify the path of the directory that contains the Hadoop jar file.

In the Arguments text field, specify the main class, generic options, and other JAR arguments.

Click Run to execute the query.

Monitor the progress of your job using the Status and Logs panes; use the switch to toggle between them. The Status pane also displays debugging information if the query does not succeed. For information on downloading command results and logs, see Get Results.
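The Arguments value for a custom JAR job can be sketched as a single string: the main class first, then generic options, then the job's own arguments. The example below is only an illustration. The class name `com.example.WordCount` and the bucket paths are hypothetical, and it assumes the main class parses generic options (for example through Hadoop's `ToolRunner`); `-D property=value` is a standard Hadoop generic option.

```python
import shlex
from typing import Dict, List


def jar_arguments(main_class: str, generic: Dict[str, str], job_args: List[str]) -> str:
    """Join the main class, -D generic options, and job arguments into one string."""
    parts = [main_class]
    for key, value in generic.items():
        # Each generic property becomes "-D key=value".
        parts += ["-D", f"{key}={value}"]
    parts += job_args
    # shlex.join quotes any token that contains shell-unsafe characters.
    return shlex.join(parts)


args = jar_arguments(
    "com.example.WordCount",                    # hypothetical main class
    {"mapreduce.job.reduces": "2"},             # generic option: number of reducers
    ["s3://example-bucket/input",               # hypothetical input path
     "s3://example-bucket/output/run-001"],     # hypothetical, not-yet-existing output path
)
print(args)
```

The printed string is what you would paste into the Arguments text field.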

For information on the REST API, see Submitting a Hadoop Jar Command.
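As a rough sketch of what a REST submission body might look like, the snippet below only builds a JSON document and does not send it. The field names `command_type`, `sub_command`, and `sub_command_args`, and their values, are assumptions that are not confirmed on this page; check Submitting a Hadoop Jar Command for the exact schema, endpoint, and authentication headers.

```python
import json


def hadoop_jar_payload(jar_path: str, arguments: str) -> str:
    """Build a JSON body for a Hadoop jar command (field names are assumptions)."""
    body = {
        "command_type": "HadoopCommand",                 # ASSUMPTION: verify in the API reference
        "sub_command": "jar",                            # ASSUMPTION: verify in the API reference
        "sub_command_args": f"{jar_path} {arguments}",   # ASSUMPTION: jar path, then arguments
    }
    return json.dumps(body, indent=2)


# Hypothetical jar location, main class, and paths.
print(hadoop_jar_payload(
    "s3://example-bucket/jars/wordcount.jar",
    "com.example.WordCount s3://example-bucket/input s3://example-bucket/output/run-001",
))
```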

Compose a Hadoop Streaming Query

Perform the following steps to compose a Hadoop streaming query:

Navigate to Workbench and click + New Collection.

Select Hadoop from the command type drop-down list.

Choose the cluster on which you want to run the query. View the health metrics of a cluster before you decide to use it.

Select streaming from the Job Type drop-down list.

In the Arguments text field, specify the streaming and generic options.

Click Run to execute the query.

Monitor the progress of your job using the Status and Logs panes; use the switch to toggle between them. The Status pane also displays debugging information if the query does not succeed. For information on downloading command results and logs, see Get Results.
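For a streaming job, the Arguments value combines generic options with streaming options. In Hadoop streaming, generic options such as `-D` and `-files` must come before streaming options such as `-input`, `-output`, `-mapper`, and `-reducer`. The sketch below assembles such a string; the script names and bucket paths are hypothetical, and whether your cluster accepts these exact locations is an assumption to verify.

```python
import shlex
from typing import Dict, List


def streaming_arguments(generic: Dict[str, str], files: List[str], mapper: str,
                        reducer: str, input_path: str, output_path: str) -> str:
    """Build a streaming argument string with generic options placed first."""
    parts: List[str] = []
    for key, value in generic.items():
        parts += ["-D", f"{key}={value}"]       # generic option
    if files:
        parts += ["-files", ",".join(files)]    # generic option: ship scripts to the tasks
    # Streaming options follow the generic options.
    parts += ["-input", input_path, "-output", output_path,
              "-mapper", mapper, "-reducer", reducer]
    return shlex.join(parts)


args = streaming_arguments(
    {"mapreduce.job.reduces": "1"},
    ["s3://example-bucket/scripts/mapper.py",    # hypothetical script locations
     "s3://example-bucket/scripts/reducer.py"],
    "mapper.py",
    "reducer.py",
    "s3://example-bucket/input",                 # hypothetical input path
    "s3://example-bucket/output/run-001",        # hypothetical, not-yet-existing output path
)
print(args)
```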

Compose a Hadoop DistCp Command

Perform the following steps to compose a Hadoop DistCp command:

Navigate to Workbench and click + New Collection.

Select Hadoop from the command type drop-down list.

Choose the cluster on which you want to run the command. View the health metrics of a cluster before you decide to use it.

From the Job Type drop-down list, select s3distcp for AWS, or clouddistcp for Azure, GCP, or Oracle.

In the Arguments text field, specify the generic and DistCp options.

Click Run to execute the command.

Monitor the progress of your job using the Status and Logs panes; use the switch to toggle between them. The Status pane also displays debugging information if the command does not succeed. For information on downloading command results and logs, see Get Results.
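The job type choice and a possible Arguments value for a DistCp command can be sketched as follows. The cloud-to-job-type mapping comes from the steps above. The `--src` and `--dest` options follow the S3DistCp convention and are an assumption here; `clouddistcp` may expect different options, so check the options your job type supports. All paths are hypothetical.

```python
import shlex

# Mapping from the steps above: s3distcp for AWS; clouddistcp for Azure, GCP, or Oracle.
JOB_TYPE_BY_CLOUD = {
    "aws": "s3distcp",
    "azure": "clouddistcp",
    "gcp": "clouddistcp",
    "oracle": "clouddistcp",
}


def distcp_job_type(cloud: str) -> str:
    """Return the Job Type to select for the given cloud provider."""
    return JOB_TYPE_BY_CLOUD[cloud.strip().lower()]


def distcp_arguments(src: str, dest: str) -> str:
    """Build a copy argument string (ASSUMPTION: S3DistCp-style --src/--dest options)."""
    return shlex.join(["--src", src, "--dest", dest])


print(distcp_job_type("AWS"))
print(distcp_arguments("s3://example-bucket/datasets/raw",   # hypothetical source
                       "/datasets/raw"))                     # hypothetical destination
```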