Managing Clusters¶

QDS pre-configures a set of clusters to run the jobs and queries you initiate from the Analyze page.

Note

Qubole supports options to control the query runtime, which are described in Configuring Query Runtime Settings.

You can use the QDS UI to modify these clusters, and to create and modify new ones. This page provides detailed instructions.

QDS starts clusters when they are needed and resizes them according to the query workload.

Note

- By default, QDS shuts down a cluster after two hours of inactivity (that is, when no command has been running during the past two hours). This default is configurable; set Idle Cluster Timeout as explained below.

- A cluster lifespan depends on a session in a user account; the cluster runs as long as there is an active cluster session. By default, a session stays alive for two hours of inactivity and can run for any amount of time as long as commands are running. Use the Sessions tab in Control Panel to create new sessions, terminate sessions, or extend sessions. For more information, see Managing Sessions.

Other Useful Pages¶

- For topics of interest to non-administrator users, see Qubole Clusters.

- For instructions on designating a default cluster, see Setting Cluster as Default.

- For an overview of the Clusters page in the QDS UI, see Using the Cluster User Interface.

- For a summary of actions you can perform on a cluster (start, stop, etc.), see Cluster Operations.

To Get Started¶

- To see what clusters are active, and for information about each cluster, navigate to the Clusters page.

- To add a new cluster, click New on the Clusters page; to modify an existing cluster, click the Edit button next to that cluster.

Now choose one of the following sections, depending on your Cloud provider:

Note

To supplement the information on this page, you can click the help icon in the UI for help on a field or check box.

Adding and Modifying the Cluster Configuration (AWS)¶

Adding or Changing Settings (AWS)¶

You can add and modify settings as follows:

Type - Select the type of cluster. Click the Customize drop down and select your Image Preferences under Generation and under Release. Once both are selected, they cannot be changed or modified later. This field is applicable to a new cluster that you create.

Configuration. See Modifying Cluster Settings.

Composition. See Modifying Cluster Composition.

Advanced Configuration:

- EC2 SETTINGS. See Modifying EC2 Settings.

- HADOOP CLUSTER SETTINGS. See Modifying Hadoop Cluster Settings.

- HIVE SETTINGS. Available only for the Hadoop 2 (Hive) clusters. See Configuring a HiveServer2 Cluster for more information.

- MONITORING. See Advanced configuration: Modifying Cluster Monitoring Settings.

- SECURITY SETTINGS. See Modifying Security Settings.

You can also define account-level default cluster tags. For more information, see Adding Account and User level Default Cluster Tags (AWS).

Cluster Configuration Settings (AWS)¶

Under the Configuration tab, you can add or modify:

Cluster Labels: A cluster can have one or more labels separated by a comma. You can set/label a cluster as default by selecting the default option. At a given point in time, only one cluster can be set as the default cluster in an account.

Note

You can reassign a cluster’s label to another cluster by dragging the label and dropping it on the target cluster in the main Clusters UI page. Ensure that the clusters involved are not running, because a running cluster may have active queries. Reassign a Cluster Label describes how to reassign a cluster label through the API.

Coordinator Node Type: Set the Coordinator node type by selecting the preferred node type from the drop-down list.

Worker Node Type: Set the worker node type by selecting the preferred node type from the drop-down list. If you select a c3, c4, c5, m3, m4, m5, p2, r3, r4, or x1 worker node type, three additional Elastic Block Store (EBS) settings are available: EBS Volume Count, EBS Volume Type (Magnetic is the default EBS volume type), and EBS Volume Size (the supported range is 100/500 GB - 16 TB, depending on the volume type). You can also enable EBS Upscaling on Spark and Hadoop 2 clusters as described in Configuring EBS Upscaling in AWS Hadoop and Spark Clusters.

Note

You can launch the c4, c5, i3, m4, m5, h1, p2, r4, and x1 instance types only in a VPC. The r4 instances are supported only in the ap-south-1, eu-west-1, us-east-1, us-east-2, us-west-1, and us-west-2 regions. The h1 instances are supported only in the us-east-1, us-east-2, us-west-2, and eu-west-1 regions.

The EBS volume types that are supported with the EBS volume size are:

- For standard (Magnetic) EBS volume type, the supported value range is 100 GB - 1 TB.

- For ssd/gp2 (General Purpose SSD) EBS volume type, the supported value range is 100 GB - 16 TB.

- For st1 (Throughput Optimized HDD) and sc1 (Cold HDD), the supported value range is 500 GB - 16 TB.

Note

For recommendations on using EBS volumes, see AWS EBS Volumes.
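The size ranges per EBS volume type can be checked before you submit a cluster configuration. The following is a minimal sketch, not part of QDS: the function name is hypothetical, 1 TB is assumed to mean 1024 GB, and the gp2 upper bound is taken as 16 TB, consistent with the overall 100/500 GB - 16 TB range given for EBS Volume Size.

```python
# Supported EBS volume size ranges in GB, following the ranges listed above.
# Assumption of this sketch: 1 TB is treated as 1024 GB.
GB_PER_TB = 1024

EBS_SIZE_RANGES_GB = {
    "standard": (100, 1 * GB_PER_TB),   # Magnetic
    "ssd": (100, 16 * GB_PER_TB),       # General Purpose SSD
    "gp2": (100, 16 * GB_PER_TB),       # General Purpose SSD
    "st1": (500, 16 * GB_PER_TB),       # Throughput Optimized HDD
    "sc1": (500, 16 * GB_PER_TB),       # Cold HDD
}


def is_supported_ebs_size(volume_type: str, size_gb: int) -> bool:
    """Return True if size_gb is inside the documented range for volume_type."""
    if volume_type not in EBS_SIZE_RANGES_GB:
        raise ValueError(f"unknown EBS volume type: {volume_type!r}")
    low, high = EBS_SIZE_RANGES_GB[volume_type]
    return low <= size_gb <= high
```

For example, `is_supported_ebs_size("st1", 100)` is rejected because st1 volumes start at 500 GB.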

Use Multiple Worker Node Types - See Configuring Heterogeneous Worker Nodes in the QDS UI for UI support in Hadoop 2 and Spark clusters.

Minimum Worker Nodes: Set the minimum number of worker nodes if you want to change it from the default 2.

Maximum Worker Nodes: Set the maximum number of worker nodes if you want to change it from the default 2.

Region - Denotes the region from which the nodes are launched. The default region is us-east-1. Select a region from the drop-down list to change it.

Availability Zone - By default, the AWS Availability Zone (AZ) is set to No Preference and Qubole lets AWS select an AZ while starting the cluster. Note that all worker nodes are always in the same AZ. To benefit from AWS reserved instances, you can select the AZ in which your instances are reserved from the drop-down list. Click the refresh icon to refresh the list of availability zones. However, if the cluster is in a VPC, you cannot set the AZ.

Note

Qubole provides a feature to select an AWS AZ based on the optimal Spot price in a hybrid cluster (a cluster with On-Demand and Spot nodes). This feature is available for beta access. Create a ticket with Qubole Support to enable this feature for the account. For more information, see Understanding the Cost Optimization in AWS EC2-Classic Clusters.

Node Bootstrap File: Set the node bootstrap file.

Disable Automatic Cluster Termination is off by default (that is, clusters are automatically terminated unless you check this box). See Shutting Down an Idle Cluster.

Idle Cluster Timeout - Qubole allows you to configure the idle cluster timeout in either Hours or Hours and minutes. The two options are described below:

Hours: The default cluster timeout is 2 hours. Optionally, you can set it to a value between 0 and 6 hours. The only unit of time supported is hours. If the timeout is set at the account level, it applies to all clusters within that account. However, you can override the timeout at the cluster level. The timeout takes effect after all queries on the cluster have completed. Qubole terminates a cluster on an hour boundary. For example, when idle_cluster_timeout is 0, if any node in the cluster is near its hour boundary (that is, it has been running for 50-60 minutes and is idle after all queries have executed), Qubole terminates that cluster. This setting is not applicable to Airflow clusters because they do not terminate automatically.

Hours and minutes: The Idle Cluster Timeout can be configured in hours and minutes. This feature is not enabled on all accounts by default. For more information, see Understanding Aggressive Downscaling in Clusters (AWS). Contact the account team to enable this feature on the QDS account.

After the feature is enabled, you can configure the timeout in hours and minutes. Understanding the Changes in the Cluster UI describes the cluster timeout in hours and minutes.
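The Hours rules can be illustrated with a small sketch. Both function names are hypothetical, and the hour-boundary check is only one reading of "running for 50-60 minutes" (the node is in the last ten minutes of its current hour); it is not QDS's actual termination logic.

```python
def validate_idle_timeout_hours(hours: int) -> int:
    """Check that an hour-based idle cluster timeout is within 0-6 hours."""
    if isinstance(hours, bool) or not isinstance(hours, int):
        raise TypeError("the timeout must be a whole number of hours")
    if not 0 <= hours <= 6:
        raise ValueError("the timeout must be between 0 and 6 hours")
    return hours


def node_near_hour_boundary(uptime_minutes: int) -> bool:
    """Assumed interpretation: the node is 50 or more minutes into its current hour."""
    return uptime_minutes % 60 >= 50
```

Under this reading, a node that has been up for 110 minutes is 50 minutes into its second hour and counts as near its hour boundary.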

Other Settings:

- Enable Ganglia Monitoring is enabled automatically if you use Datadog; otherwise it is disabled by default.

Cluster Basics and Configuring Clusters provide more information on the cluster and its configuration options.

How to Push Configuration to a Cluster provides more information on pushing certain configuration to a running cluster.

Click Next to update the cluster composition. Click Previous to go back to the previous tab. Click Cancel to undo your changes and restore the previous settings.

From the right pane (Summary) you can:

- Review and edit your changes.

- Click Create to create a new cluster.

- Click Update to change an existing cluster’s configuration.

Cluster Composition Settings (AWS)¶

Under the Cluster Composition tab, you can add or modify:

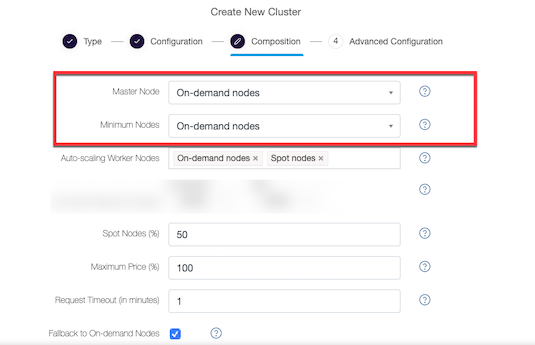

Coordinator Node - By default the value is On-Demand nodes, which are stable and are not lost because the bid price is fixed. Select this option to avoid risk of losing nodes if Spot instances are unavailable or are terminated.

Minimum Nodes - By default the value is On-Demand nodes, which are stable and are not lost because the bid price is fixed. Select this option to avoid risk of losing nodes if Spot instances are unavailable or are terminated.

The separated Coordinator Node and Minimum Nodes are as illustrated in this figure.

The other options are:

Spot Block - QDS supports configuring Spot Block instances on the new cluster UI page and also through cluster API calls. Spot Blocks are Spot instances that run continuously for a finite duration (1 to 6 hours). They are 30 to 45 percent cheaper than On-Demand instances, depending on the requested duration. They are more stable than Spot nodes because they cannot be taken away during the specified duration. However, these nodes are always terminated once the requested duration ends. For more details, see AWS spot blocks.

QDS ensures that Spot blocks are acquired at a price lower than On-Demand nodes. It also ensures that autoscaled nodes are acquired for the remaining duration of the cluster. For example, if the duration of a Spot block cluster is 5 hours and there is a need to autoscale after the second hour, Spot blocks are acquired for 3 hours.

Once you select Spot block, an additional text box is displayed to configure the duration. The default value is 2 hours. The supported value range is 1-6 hours.
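The arithmetic for autoscaled Spot blocks can be written out as a short sketch. The function name is hypothetical; it only restates the rule that autoscaled nodes are requested for the remaining duration of the cluster.

```python
def autoscaled_spot_block_hours(cluster_duration_hours: int, elapsed_hours: int) -> int:
    """Return the Spot block duration to request for a node added by autoscaling."""
    if not 1 <= cluster_duration_hours <= 6:
        raise ValueError("Spot block duration must be between 1 and 6 hours")
    if not 0 <= elapsed_hours < cluster_duration_hours:
        raise ValueError("elapsed time must be within the cluster duration")
    # Autoscaled nodes only need to live until the cluster's Spot block ends.
    return cluster_duration_hours - elapsed_hours
```

With a 5-hour Spot block cluster that autoscales after the second hour, `autoscaled_spot_block_hours(5, 2)` gives 3, matching the example in the text.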

Autoscaling Worker Nodes type. The default is Spot nodes. See AWS Settings for more information. The other options are:

On-Demand nodes

Spot Block. You can select this for autoscaling worker nodes when the Coordinator and minimum worker nodes are On-Demand nodes or Spot Blocks. When the Coordinator and minimum worker nodes are On-Demand nodes and Spot Block is selected for autoscaled nodes, Spot block rotation is enabled, in which Spot block nodes are rotated periodically. To use such a configuration, create a ticket with Qubole Support to enable the corresponding feature. For more information, see Configuring Spot Blocks.

Once you choose this configuration, Spot block rotation is enabled by default. That is, choosing On-Demand for the Coordinator and minimum worker nodes, with autoscaled nodes as a combination of Spot block and Spot, enables Spot block rotation.

The UI directs you not to set 100% Spot nodes and to select another node type for the remainder. The UI does not allow you to set Spot blocks as autoscaling nodes before choosing Spot nodes, when the Coordinator and minimum number of worker nodes are On-Demand.

Enable Fallback to on demand to avoid the risk of data loss as spot instance bid price is volatile.

Set Maximum Price Percentage if you want to change the default 100%.

Set Request timeout if you want to change the default 1 minute (in new clusters). The default for existing clusters is 10 minutes. Qubole recommends configuring a lower Spot request timeout of 1 minute in existing clusters.

Qubole has added a new option for Spot request timeout called Qubole Autotuned, which is the new default timeout. It lets Qubole decide the timeout at runtime on your behalf. This optimizes Spot fulfillment and minimizes Spot losses. It is the new default only on new clusters. It is part of Gradual Rollout.

Set Spot Nodes(%) if you want to change the default 50%.

This section provides more information about cluster composition.

From the right pane (Summary) you can:

- Review and edit your changes.

- Click Create to create a new cluster.

- Click Update to change an existing cluster’s configuration.

After updating the cluster composition, click Next to continue to Advanced Configuration.

Advanced Configuration Settings (AWS)¶

Under the Advanced Configuration tab, you can add or modify:

- EC2 SETTINGS. See Modifying EC2 Settings.

- HADOOP CLUSTER SETTINGS. See Modifying Hadoop Cluster Settings.

- HIVE SETTINGS. Available for Hadoop 2 (Hive) clusters. See Configuring a HiveServer2 Cluster for more information.

- MONITORING. See Advanced configuration: Modifying Cluster Monitoring Settings.

- SECURITY SETTINGS. See Modifying Security Settings.

Note

The cluster options described are as organized in the new Clusters UI page. Since the new UI is not globally available yet, create a ticket with Qubole Support if you would like to enable it for the account.

Advanced configuration: Modifying EC2 Settings (AWS)¶

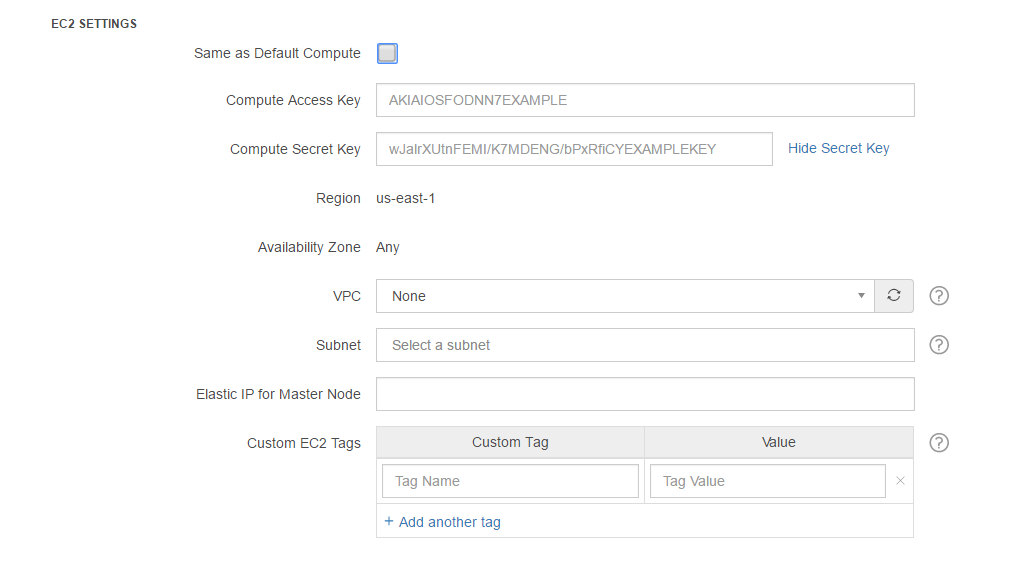

Amazon Elastic Compute Cloud (EC2) web service offers resizable compute capacity in the cloud. The EC2 settings fields are visible only when the Compute Type is managed by you and not by Qubole. The following figure shows an example of EC2 settings.

Note

If IAM Roles is set as the access mode type in account settings, Access and Secret Keys are not displayed in EC2 SETTINGS. Instead, the configured Role ARN and External ID text fields are displayed in EC2 SETTINGS. (The two fields can be modified in Control Panel > Account Settings. Changing the two fields changes compute access and storage access for all clusters of the specific account.)

EC2 SETTINGS contains the following options:

Same As Default Compute - Select this check box if you want the default compute settings for the cluster.

Note

Once you select Same as Default Compute, the Compute Access Key and Compute Secret Key text fields are not displayed.

Compute Access Key - Enter an access key (along with the secret key) to set AWS Credentials for launching the cluster.

Compute Secret Key - Enter a secret key (along with the access key) to set AWS Credentials for launching the cluster. Click Edit Secret Key to add a different key. An empty text field is displayed. Add a different secret key and click Hide Secret Key.

VPC - Set this configuration to launch the cluster within an Amazon Virtual Private Cloud (VPC). It is an optional configuration. If VPC is not specified, cluster nodes are placed in a Qubole-created security group in EC2-Classic or in the default VPC for EC2-VPC accounts. After you select a VPC, the Bastion Host text field appears; it is hidden when no VPC is selected. By default, None is selected. Click the refresh icon to refresh the VPC list.

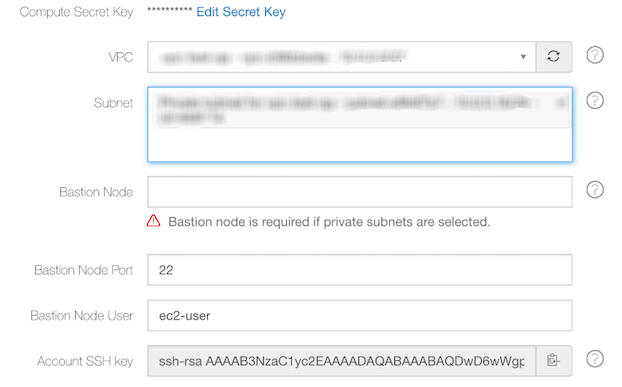

Subnet - When you select a VPC, a corresponding subnet gets selected. By default, the text field is blank as no VPC is selected. You can select a private subnet ID or a public subnet ID for the corresponding VPC.

You can select multiple private subnet IDs or public subnet IDs (if configured) for the corresponding VPC in a hybrid cluster. (A hybrid cluster contains on-demand core/minimum-number worker nodes and spot autoscaled nodes.) The public and private subnets that you select must be in different AWS AZs. For more information on how Qubole helps in optimizing AWS AZ cost based on the Spot price, see Cost Optimization and Ranking of Subnets in Multi-subnet Clusters (AWS).

Bastion Node - You can see this text field after you select a VPC. Specify the Bastion host DNS in the text field. You must specify a Bastion host DNS for a private subnet and this text field is not used for public subnets.

Bastion Node Port - The default port is 22. You can specify a non-default port.

Bastion Node User - It has the default user specified. You can specify a non-default user if required.

Account SSH Key - You can see this text field after you select a VPC and a private subnet for that VPC. This field displays the public key of the SSH key pair that you should use when logging in to the Bastion node for a private subnet.

The following illustration shows a sample account SSH key in the EC2 settings.

Use Dedicated Instances - You can see this option after you select a VPC. Enable it if you want the Coordinator and worker nodes to be dedicated instances. Dedicated instances do not share physical hardware with any instances outside of the respective AWS account.

Elastic IP for Coordinator Node - It is the Elastic IP address for attaching to the cluster Coordinator. For more information, see this documentation.

Custom EC2 Tags - You can set a custom EC2 tag if you want the instances of a cluster and EBS volumes attached to these instances to get that tag on AWS. This tag is useful in billing across teams as you get the AWS cost per tag, which helps to calculate the AWS costs of different teams in a company.

Add a tag in Custom Tag and enter a value for the tag in the corresponding text field as shown in the above figure. Tags and values must have alphanumeric characters and can contain only these special characters: + (plus sign), . (full stop/period/dot), - (hyphen), @ (at sign), and _ (underscore). The tags Qubole and alias are reserved for use by Qubole (see Qubole Cluster EC2 Tags (AWS)). Tags beginning with aws- are reserved for use by Amazon. Click Add EC2 tags if you want to add more than one custom tag. A maximum of five custom tags can be added per cluster. These tags are also applied to the Qubole-created security groups (if any).
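The tag rules lend themselves to a pre-flight check before you enter tags in the UI. This is a minimal sketch with a hypothetical function name. It assumes that tags and values must contain at least one alphanumeric character, and that reserved names are matched without regard to case; neither detail is confirmed by this page.

```python
import re
from typing import Dict, List

# Alphanumerics plus the special characters listed above: + . - @ _
ALLOWED_CHARS = re.compile(r"[A-Za-z0-9+.\-@_]+")
RESERVED_TAGS = {"qubole", "alias"}  # reserved for use by Qubole
MAX_CUSTOM_TAGS = 5


def validate_custom_ec2_tags(tags: Dict[str, str]) -> List[str]:
    """Return a list of problems found; an empty list means the tags look valid."""
    problems = []
    if len(tags) > MAX_CUSTOM_TAGS:
        problems.append(f"at most {MAX_CUSTOM_TAGS} custom tags are allowed per cluster")
    for key, value in tags.items():
        for label, text in (("tag", key), ("value", value)):
            has_alnum = any(ch.isalnum() for ch in text)
            if not ALLOWED_CHARS.fullmatch(text) or not has_alnum:
                problems.append(f"{label} {text!r} contains unsupported characters")
        if key.lower() in RESERVED_TAGS:
            problems.append(f"tag {key!r} is reserved for use by Qubole")
        if key.lower().startswith("aws-"):
            problems.append(f"tag {key!r} uses the aws- prefix reserved by Amazon")
    return problems
```

For example, `validate_custom_ec2_tags({"team": "data-eng"})` returns an empty list, while a tag named `alias` is reported as reserved.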

Qubole now supports account level and user level default cluster tags, which is a feature enabled on request. For more information, see Adding Account and User level Default Cluster Tags (AWS).

AWS EC2 Settings provides more information. See Manage Roles for information on how permissions to access features are granted and restricted.

Advanced Configuration: Modifying Hadoop Cluster Settings¶

Under HADOOP CLUSTER SETTINGS, you can:

- Specify the Default Fair Scheduler Queue, which is used if no queue is specified during job submission.

- Override Hadoop Configuration Variables for the Worker Node Type specified in the Cluster Settings section. The settings shown in the Recommended Configuration field are used unless you override them.

- Set Fair Scheduler Configuration values to override the default values.

Hadoop-specific Options provides more description about the options.

For an Azure cluster running Hadoop, you can use the Override Hadoop Configuration Variables field to configure the cluster to use more than one Azure storage account; see Configuring an Azure Cluster to Use Additional Storage Accounts.

Note

Recommissioning can be enabled on Hadoop clusters as an Override Hadoop Configuration Variable. See Enable Recommissioning for more information.

See Enabling Container Packing in Hadoop 2 and Spark for more information on how to downscale more effectively in a Hadoop 2 cluster.

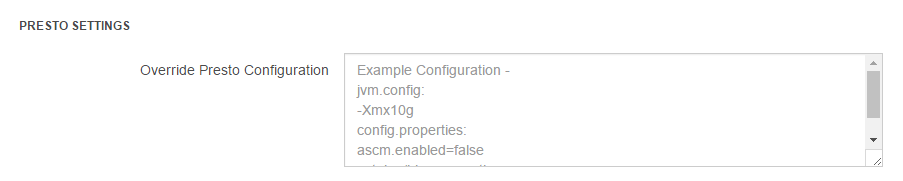

If the cluster type is Presto, you must set Presto Settings as shown in the following figure.

If the cluster type is Spark, Spark configuration is set in the Recommended Spark Configuration, which is in addition to Hadoop Cluster Settings as described in Configuring a Spark Cluster.

Advanced Configuration: Modifying Security Settings (AWS)¶

Under SECURITY SETTINGS:

Qubole Public Key cannot be changed. QDS uses this key to access the cluster and run commands on it.

Note

You can improve the security of a cluster by authorizing Qubole to generate a unique SSH key every time a cluster is started; create a support ticket to enable this capability. A unique SSH key is generated by default in the https://in.qubole.com, https://us.qubole.com, and https://wellness.qubole.com environments.

Once the SSH key is enabled, QDS uses the unique SSH key to interact with the cluster. If you want to use this capability to control QDS access to a Bastion node communicating with a cluster running on a private subnet, you can use the account-level key, which you must authorize on the Bastion node.

To get the account-level key, use this API or navigate to the cluster’s Advanced Configuration; the account-level SSH key is displayed in EC2 Settings as described in Advanced configuration: Modifying EC2 Settings (AWS).

Setting Customer Public SSH Key is optional; you can use it to log in to QDS cluster nodes. If used, this must be the public key from an SSH public-private key pair, and you need to configure a Persistent Security Group that has a rule allowing SSH access from a set of IP addresses or security groups that you specify.

Persistent Security Groups - Setting this for a cluster overrides the account-level persistent security group, if any. Use this option if you want to give additional access permissions to cluster nodes. Qubole uses only the security group name for validation, so do not provide the security group’s ID. You must provide a persistent security group when you configure outbound communication from cluster nodes to pass through an Internet proxy server.

Select Enable encryption to encrypt data-at-rest on the node’s ephemeral (local) storage. This includes HDFS and any intermediate output generated by Hadoop. Block device encryption is set up before the node joins the cluster; this can increase the time the cluster takes to come up.

Advanced configuration: Modifying Cluster Monitoring Settings¶

Under MONITORING:

- Enable Ganglia Monitoring: Ganglia monitoring is enabled automatically if you use Datadog; otherwise it is disabled by default. For more information on Ganglia monitoring, see Performance Monitoring with Ganglia.

Modify Datadog settings

If you have configured Datadog under Account Settings, you can override those settings here for this particular cluster if you need to. Specify the API token (Datadog API key) and APP token (Datadog application key). When you configure Datadog settings, Ganglia monitoring is automatically enabled.

Note

Although Ganglia monitoring is enabled, its link may not be visible in the cluster’s UI resources list.

Notification Channel: Select a channel to configure receiving alerts and notifications related to your cluster. To know more about how to create a Notification Channel, see Creating Notification Channels.

Note

This feature is a part of Gradual Rollout.

Applying Your Changes¶

Under each tab on the Clusters page, there is a right pane (Summary) from which you can:

- Review and edit your changes.

- Click Create to create a new cluster.

- Click Update to change an existing cluster’s configuration.

If you are not satisfied with your changes:

- Click Previous to go back to the previous tab.

- Click Cancel to leave settings unchanged.

Modifying Cluster Settings for Azure¶

Under the Configuration tab, you can add or modify:

Cluster Labels: A cluster can have one or more labels separated by commas. You can make a cluster the default cluster by including the label default.

Presto Version: For a Presto cluster, choose a supported version from the drop-down list.

Hive Version: For a Hive cluster, choose a supported version from the drop-down list.

Node types: Qubole recommends that you change the Coordinator and worker node types from the default (Standard_A5). D Series v2 instances are good choices; for example Standard_D4_v2 for the Coordinator node and Standard_D3_v2 for the worker nodes, or Standard_D12_v2 for the Coordinator node and Standard_D13_v2 for the worker nodes.

- Coordinator Node Type: Change the Coordinator node type from the default by selecting a different type from the drop-down list.

- Worker Node Type: Change the worker node type from the default by selecting a different type from the drop-down list.

Use Multiple Worker Node Types - (Hadoop 2 and Spark clusters) See Configuring Heterogeneous Worker Nodes in the QDS UI.

Minimum Worker Nodes: Enter the minimum number of worker nodes if you want to change it (the default is 1).

Maximum Worker Nodes: Enter the maximum number of worker nodes if you want to change it (the default is 1).

Node Bootstrap File: You can append the name of a node bootstrap script to the default path.

Disable Automatic Cluster Termination: Check this box if you do not want QDS to terminate idle clusters automatically. Qubole recommends that you leave this box unchecked.

Idle Cluster Timeout: Optionally specify how long (in hours) QDS should wait to terminate an idle cluster. The default is 2 hours; to change it, enter a number between 0 and 6. This overrides the timeout set at the account level (see Configuring Advanced Settings for Azure).

Note

See Aggressive Downscaling (Azure) for information about an additional set of capabilities that currently require a Qubole Support ticket.

Under the Advanced Configuration tab you can add or modify:

Same As Default Compute: Uncheck this to change your default Azure compute settings.

Resource Group: See Overriding the Azure Default Resource Group if you want to override the account-level default for this cluster.

Location: Click on the drop-down list to choose the Azure geographical location.

Managed Disk Type: This denotes the type of disk storage. Set it to Standard or Premium; OR set the Disk Storage Account.

Note

You can set either Managed Disk Type or Disk Storage Account Name, but not both.

Disk Storage Account Name: If you did not choose a Managed Disk Type, choose your Azure storage account. See also Configuring an Azure Cluster to Use Additional Storage Accounts.

Data Disk Count: Choose the number of reserved disk volumes to be mounted to each cluster node, if any. Reserved volumes provide additional storage reserved for HDFS and intermediate data such as Hadoop Mapper output.

Data Disk Size: Choose the size in gigabytes of each reserved volume.

Enable Disk Upscaling: Check this box if you want QDS to add disks to any node whose local storage is running low. If you check the box, specify the following:

- Maximum Data Disk Count: The maximum number of disks that QDS can add to a node. Should be more than Data Disk Count, or disk upscaling will not occur, and cannot be more than 64.

- Free Space Threshold %: The minimum percentage of storage that must be available (free) on the node (defaults to 25%).

- Absolute Free Space Threshold: The absolute amount of storage that must be available (free), in gigabytes (defaults to 100 GB).

See Disk Upscaling on Hadoop MRv2 Azure Clusters for more information.
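The constraints on Maximum Data Disk Count can be checked with a short sketch before you save the cluster. The function name is hypothetical and the check covers only the two rules stated above (more than Data Disk Count, and no more than 64); it does not model how QDS decides when to add a disk.

```python
from typing import List

MAX_DISKS_PER_NODE = 64  # upper limit stated for Maximum Data Disk Count


def check_disk_upscaling(data_disk_count: int, max_data_disk_count: int) -> List[str]:
    """Return warnings for a disk upscaling configuration; empty means it looks fine."""
    warnings = []
    if max_data_disk_count > MAX_DISKS_PER_NODE:
        warnings.append(f"Maximum Data Disk Count cannot be more than {MAX_DISKS_PER_NODE}")
    if max_data_disk_count <= data_disk_count:
        warnings.append(
            "Maximum Data Disk Count should be more than Data Disk Count, "
            "or disk upscaling will not occur"
        )
    return warnings
```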

Coordinator Static IP Name: Optional. The name corresponding to a static public IP address which you can use to connect to the cluster Coordinator node. Configuring a static IP address allows you to use the same address each time you connect to this cluster.

Coordinator Static Network Interface Name: Optional. The name corresponding to a static network interface (configured in Azure) which you can use to connect to the cluster Coordinator node, using either a private or public IP address, or both. Configuring a static IP address allows you to use the same address each time you connect to this cluster.

Virtual Network: Select your virtual network (VNet).

Subnet Name: Choose the subnet.

Bastion Node: The public IP address of the Bastion node used to access private subnets, if any.

Network Security Group: Optionally choose a network security group from the drop-down menu. A network security group contains a list of security rules that permit or restrict network access to resources connected to Vnets. If you specify a network security group here, QDS adds all of its rules to the default security group it creates for the cluster.

Custom Tags: You can create tags to be applied to the Azure virtual machines.

Override Hadoop Configuration Variables: For a Hadoop (Hive) cluster, enter Hadoop variables here if you want to override the defaults (Recommended Configuration) that Qubole uses. See also Advanced Configuration: Modifying Hadoop Cluster Settings.

Override Presto Configuration: For a Presto cluster, enter Presto settings here; QDS does not provide a recommended configuration. You can also enable RubiX caching. See also Advanced Configuration: Modifying Hadoop Cluster Settings and Configuring a Presto Cluster.

Fair Scheduler Configuration: For a Hadoop or Spark cluster, enter Hadoop Fair Scheduler values if you want to override the defaults that Qubole uses.

Default Fair Scheduler Queue: Specify the default Fair Scheduler queue (used if no queue is specified when the job is submitted).

Override Spark Configuration: For a Spark cluster, enter Spark settings here if you want to override the defaults (Recommended Configuration) that Qubole uses. See also Advanced Configuration: Modifying Hadoop Cluster Settings.

Python and R Version: For a Spark cluster, choose the versions from the drop-down menu.

HIVE SETTINGS: See Configuring a HiveServer2 Cluster.

MONITORING: See Advanced configuration: Modifying Cluster Monitoring Settings.

Customer SSH Public Key: The public key from an SSH public-private key pair, used to log in to QDS cluster nodes. To use SSH, you must configure a network security group with a rule that allows access to the cluster Subnet via an ACL (Access Control List) or other means.

Qubole Public Key cannot be changed. QDS uses this key to gain access to the cluster and run commands on it.

Note

You can improve the security of a cluster by authorizing Qubole to generate a unique SSH key every time a cluster is started; create a ticket with Qubole Support to enable this capability. Once it is enabled, if you want to use this capability to control QDS access to a Bastion node communicating with a cluster running on a private subnet, Qubole Support will provide you with an account-level key that you must authorize on the Bastion host.

When you are satisfied with your changes, click Create or Update.

Configuring an Azure Cluster to Use Additional Storage Accounts¶

An Azure cluster running Hadoop (Hadoop 2 (Hive), Presto, and Spark clusters) can have access to more than one Azure storage account.

You configure this in the HADOOP CLUSTER SETTINGS section under the Advanced Configuration tab of the Clusters page in the QDS UI. Identify each additional account in the Override Hadoop Configuration Variables field in the following form:

fs.azure.account.key.<blob_name>.blob.core.windows.net=<access_key>

where access_key is the access key for this storage account.
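If you have several additional storage accounts, the override entries can be generated rather than typed by hand. The sketch below uses a hypothetical helper name and assumes one entry per line in the Override Hadoop Configuration Variables field; the account names and keys shown in the usage note are placeholders.

```python
from typing import Dict


def storage_account_overrides(accounts: Dict[str, str]) -> str:
    """Build one override line per storage account.

    accounts maps each <blob_name> to its <access_key>.
    """
    lines = [
        f"fs.azure.account.key.{blob_name}.blob.core.windows.net={access_key}"
        for blob_name, access_key in accounts.items()
    ]
    # Assumption: the UI field accepts one key=value entry per line.
    return "\n".join(lines)
```

Calling `storage_account_overrides({"exampleaccount": "EXAMPLE_KEY"})` produces a single line in the form shown above, ready to paste into the field.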

Overriding the Azure Default Resource Group¶

When you configured your account, you specified the Azure resource group in which QDS brings up your clusters. This allowed QDS to create a default resource group.

You can override this default resource group when you configure a cluster. To do this, proceed as follows:

Navigate to the Clusters page in the QDS UI.

Open the Advanced Configuration tab.

Either:

- Choose a new resource group and location from the dropdown lists. The cluster will use the resource group you specify, with your existing account credentials;

Or:

- Enter new credentials, and choose a new resource group and location from the dropdown lists. The cluster will use the resource group you specify, with the new account credentials.

Configuring Azure Application Roles¶

When you created your initial configuration, you probably followed Qubole’s recommendation and assigned the Contributor role to the application you created to launch QDS clusters. If you find that this role does not meet your needs, you can do one of the following:

- Configure a more restrictive Azure role for the QDS application.

- Define custom Azure roles for the QDS application.

Instructions for each option follow.

Configuring a More Restrictive Azure Role for the QDS Application

If you decide you want to assign the application you created to a more restrictive role than Contributor, proceed as follows:

Create a custom role with the following actions:

[
  "Microsoft.Authorization/roleDefinitions/read",
  "Microsoft.Compute/images/read",
  "Microsoft.Compute/operations/read",
  "Microsoft.Compute/disks/*",
  "Microsoft.Compute/snapshots/*",
  "Microsoft.Compute/virtualMachines/*",
  "Microsoft.Compute/locations/*/read",
  "Microsoft.Network/*/read",
  "Microsoft.Network/networkInterfaces/*",
  "Microsoft.Network/publicIPAddresses/*",
  "Microsoft.Network/networkSecurityGroups/*",
  "Microsoft.Network/virtualNetworks/subnets/join/action",
  "Microsoft.Network/routeTables/*",
  "Microsoft.Resources/subscriptions/resourcegroups/*",
  "Microsoft.Storage/storageAccounts/read",
  "Microsoft.Storage/storageAccounts/blobServices/*",
  "Microsoft.Storage/storageAccounts/listKeys/action",
  "Microsoft.DataLakeStore/*/read"
]

Use the Azure portal and these Azure instructions to assign the application to the role you have just created.
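If you prefer to keep the role in a file, the action list above can be wrapped in a JSON role definition. The sketch below is one possible way to generate it in Python; it assumes the usual Azure custom-role layout (Name, Description, Actions, NotActions, AssignableScopes). The role name, description, and subscription ID are placeholders, not values supplied by Qubole.

```python
import json

# Actions copied from the list above.
QDS_ACTIONS = [
    "Microsoft.Authorization/roleDefinitions/read",
    "Microsoft.Compute/images/read",
    "Microsoft.Compute/operations/read",
    "Microsoft.Compute/disks/*",
    "Microsoft.Compute/snapshots/*",
    "Microsoft.Compute/virtualMachines/*",
    "Microsoft.Compute/locations/*/read",
    "Microsoft.Network/*/read",
    "Microsoft.Network/networkInterfaces/*",
    "Microsoft.Network/publicIPAddresses/*",
    "Microsoft.Network/networkSecurityGroups/*",
    "Microsoft.Network/virtualNetworks/subnets/join/action",
    "Microsoft.Network/routeTables/*",
    "Microsoft.Resources/subscriptions/resourcegroups/*",
    "Microsoft.Storage/storageAccounts/read",
    "Microsoft.Storage/storageAccounts/blobServices/*",
    "Microsoft.Storage/storageAccounts/listKeys/action",
    "Microsoft.DataLakeStore/*/read",
]


def build_role_definition(name, subscription_id):
    """Build a custom role definition scoped to a whole subscription."""
    return {
        "Name": name,  # hypothetical role name
        "Description": "Restricted role for the QDS application (example).",
        "Actions": QDS_ACTIONS,
        "NotActions": [],
        "AssignableScopes": [f"/subscriptions/{subscription_id}"],
    }


# Placeholder subscription ID, for illustration only.
role = build_role_definition(
    "qds-restricted-example", "00000000-0000-0000-0000-000000000000"
)
role_json = json.dumps(role, indent=2)
print(role_json)
```

Save the printed JSON to a file if you want to create the role from the Azure CLI rather than the portal.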

Defining Custom Azure Roles for the QDS Application

You may find that neither the Contributor role, nor the more restrictive role defined above, fits your needs. For example, you may want to define and assign a role that provides access to a resource group containing storage or networking components, rather than the subscription as a whole.

Below are:

- Sample custom roles

- Sample commands for creating and assigning the roles

Note

To define and implement custom roles, you must have the appropriate Azure permissions, and you will need to have the Azure command-line interface (CLI) installed locally. For more information, see Create custom roles using Azure CLI.

Defining Custom Azure Roles: Sample Custom Roles

Assignable Scope: Subscription - restrict access to read-only subscription-wide.

"Microsoft.Resources/subscriptions/resourcegroups/read"

Note

For this very restrictive role, you will need to:

- Create a ticket with Qubole Support to enable you to configure the role.

- Create the resource group as instructed by Qubole Support.

This applies to any setting more restrictive than

"Microsoft.Resources/subscriptions/resourcegroups/*"

Assignable Scope: resource group or subscription - can be applied to one or more resource groups within a subscription, or subscription-wide.

"Microsoft.Compute/operations/read"
"Microsoft.Compute/disks/*"
"Microsoft.Compute/snapshots/*"
"Microsoft.Compute/virtualMachines/*"
"Microsoft.Compute/locations/*/read"
"Microsoft.Network/*/read"
"Microsoft.Network/networkInterfaces/*"
"Microsoft.Network/publicIPAddresses/*"
"Microsoft.Network/networkSecurityGroups/*"
"Microsoft.Network/virtualNetworks/subnets/join/action"
"Microsoft.Network/routeTables/*"

Assignable Scope: resource group - for networking resources.

"Microsoft.Network/*/read"
"Microsoft.Network/routeTables/*/read"
"Microsoft.Network/virtualNetworks/subnets/join/action"
"Microsoft.Network/networkSecurityGroups/*/read"
"Microsoft.Network/networkSecurityGroups/join/action"

Assignable Scope: resource group - for storage resources that Qubole needs access to.

"Microsoft.Storage/storageAccounts/read"
"Microsoft.Storage/storageAccounts/blobServices/*"
"Microsoft.Storage/storageAccounts/listKeys/action"

Defining Custom Azure Roles: Sample Commands

Use the Azure command-line interface (CLI) to implement and apply these roles.

To define a role:

az role definition create --role-definition "<file>"

where <file> contains the role definition and must be in JSON format.

To assign a role:

az role assignment create --assignee <object-id-of-app> --resource-group <resource-group> --role <role>

where <object-id-of-app> is the object ID of the application you created to launch QDS clusters, <resource-group> is the name of the resource group, and <role> is the role defined by the role definition create command.
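If you script these steps, it can help to assemble the two commands as argument lists before running them. The Python sketch below only builds and prints the commands; it does not call Azure. The function names, file name, object ID, resource group, and role name are all placeholders chosen for illustration.

```python
import shlex


def role_definition_command(definition_file):
    """Argument list for creating a role from a JSON definition file."""
    return [
        "az", "role", "definition", "create",
        "--role-definition", definition_file,
    ]


def role_assignment_command(app_object_id, resource_group, role):
    """Argument list for assigning a role to the application in one resource group."""
    return [
        "az", "role", "assignment", "create",
        "--assignee", app_object_id,
        "--resource-group", resource_group,
        "--role", role,
    ]


# Placeholder values, for illustration only.
define_cmd = role_definition_command("qds-role.json")
assign_cmd = role_assignment_command(
    "11111111-1111-1111-1111-111111111111",
    "example-resource-group",
    "qds-restricted-example",
)

# shlex.join quotes arguments so the printed line can be pasted into a shell.
print(shlex.join(define_cmd))
print(shlex.join(assign_cmd))
```

Keeping the arguments in a list also means you can pass them directly to subprocess.run once the Azure CLI is installed and you are logged in.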

Modifying Cluster Settings for Oracle OCI¶

Under the Type tab, you can choose a Hadoop 2 or Spark Cluster.

Under the Configuration tab, you can modify:

- Cluster Labels: A cluster can have one or more labels separated by commas. You can make a cluster the default cluster by including the label “default”.

- Coordinator Node Type: To change the Coordinator node type from the default, select a different type from the drop-down list.

- Worker Node Type: To change the worker node type from the default, select a different type from the drop-down list.

- Use Multiple Worker Node Types: (Hadoop 2 and Spark clusters) See Configuring Heterogeneous Worker Nodes in the QDS UI.

- Minimum Worker Nodes: Enter the minimum number of worker nodes if you want to change it (the default is 2).

- Maximum Worker Nodes: Enter the maximum number of worker nodes if you want to change it (the default is 2).

- Region: To change the region, select a different one from the drop-down list.

- Availability Zone: To change the Availability Zone, select a different one from the drop-down list.

- Node Bootstrap File: You can append the name of a node bootstrap script to the default path.

- Disable Automatic Cluster Termination: You can enable or disable automatic termination of an idle cluster. Qubole recommends leaving automatic termination enabled (that is, leave the box unchecked). Otherwise you may accidentally leave an idle cluster running and incur unnecessary and possibly large costs.

Under the Advanced Configuration tab, you can:

- Choose Same as Default Compute (check the box); or

- Modify the settings as described here.

You can also:

- Change the Compartment ID, VCN, and AD and Subnet Pair. These must meet the requirements described here.

- Provide the public IP address of a bastion node. See also this Oracle white paper.

- Add custom tags to the Oracle instances.

In the HADOOP CLUSTER SETTINGS section, you can modify:

- Hadoop Configuration Variables: Enter Hadoop variables here if you want to override the defaults that Qubole uses.

- Fair Scheduler Configuration: Enter Hadoop Fair Scheduler values if you want to override the defaults that Qubole uses.

- Default Fair Scheduler Pool: Specify the default Fair Scheduler pool (used if no pool is specified when the job is submitted).

Note

In the Hadoop 2 implementation, pools are referred to as “queues”.

In the MONITORING section, you can choose to enable Ganglia monitoring (check the box).

In the SECURITY SETTINGS section, you can modify:

- Customer SSH Public Key: The public key from an SSH public-private key pair, used to log in to QDS cluster nodes.

Qubole Public Key cannot be changed. QDS uses this key to gain access to the cluster and run commands on it.

Note

You can improve the security of a cluster by authorizing Qubole to generate a unique SSH key every time a cluster is started. This is not enabled by default; create a ticket with Qubole Support to enable it. Once it is enabled, Qubole will use the unique SSH key to interact with the cluster. For clusters running in private subnets, QDS uses a unique SSH key for the Qubole account; the SSH key must be authorized on the Bastion host.

When you are satisfied with your changes, click Create or Update.

Modifying Cluster Settings for Oracle OCI Classic¶

Under the Type tab, you can choose a Hadoop 2 or Spark Cluster.

Under the Configuration tab, you can modify:

- Cluster Labels: A cluster can have one or more labels separated by commas. You can make a cluster the default cluster by including the label “default”.

- Coordinator Node Type: To change the Coordinator node type from the default, select a different type from the drop-down list.

- Worker Node Type: To change the worker node type from the default, select a different type from the drop-down list.

- Minimum Worker Nodes: Enter the minimum number of worker nodes if you want to change it (the default is 2).

- Maximum Worker Nodes: Enter the maximum number of worker nodes if you want to change it (the default is 2).

- Data Disk Count: Optionally specify the number of data disks to be mounted to each node (the default is none).

- Data Disk Size: If you are adding data disks, specify the size of each disk, in GB (the default is 100).

- Node Bootstrap File: You can append the name of a node bootstrap script to the default path.

- Disable Automatic Cluster Termination: You can enable or disable automatic termination of an idle cluster. Qubole recommends leaving automatic termination enabled (that is, leave the box unchecked). Otherwise you may accidentally leave an idle cluster running and incur unnecessary and possibly large costs.

Under the Advanced Configuration tab, you can:

- Choose Same as Default Compute (check the box); or

- Modify the settings as described here.

You can also optionally specify a private subnet and ACL for this cluster.

In the HADOOP CLUSTER SETTINGS section, you can modify:

- Hadoop Configuration Variables: Enter Hadoop variables here if you want to override the defaults that Qubole uses.

- Fair Scheduler Configuration: Enter Hadoop Fair Scheduler values if you want to override the defaults that Qubole uses.

- Default Fair Scheduler Queue: Specify the default Fair Scheduler queue (used if no queue is specified when the job is submitted).

In the MONITORING section, you can choose to:

- Enable Ganglia monitoring (check the box).

- Override your account-level Datadog settings, if any: provide the API and App tokens to be used by this particular cluster.

In the SECURITY SETTINGS section, you can modify:

- Customer SSH Public Key: The public key from an SSH public-private key pair, used to log in to QDS cluster nodes.

When you are satisfied with your changes, click Save.