MapReduce Configuration in Hadoop 2

Qubole’s Hadoop 2 offering is based on Apache Hadoop 2.6.0. Qubole has some optimizations in the cloud object storage access and has enhanced it with its autoscaling code. Qubole jars have been uploaded in a maven repository and can be accessed seamlessly for developing mapreduce/yarn applications as highlighted by this POM file.

In Hadoop 2, Resource Manager and ApplicationMaster handle tasks and assign them to nodes in the cluster. Map and Reduce slots are replaced by containers.

In Hadoop 2, slots have been replaced by containers, which is an abstracted part of the worker resources. A container can be of any size within the limit of the Node Manager (worker node). The map and reduce tasks are Java Virtual Machines (JVMs) launched within these containers.

This change means that specifying the container sizes become important. For example, a memory-heavy map task, would require a larger container than a lighter map task. Moreover, the container sizes are different for different instance types (for example, an instance with larger memory has larger container size). While Qubole specifies good default parameters for the container sizes per instances, there are certain cases when you would like to change the defaults.

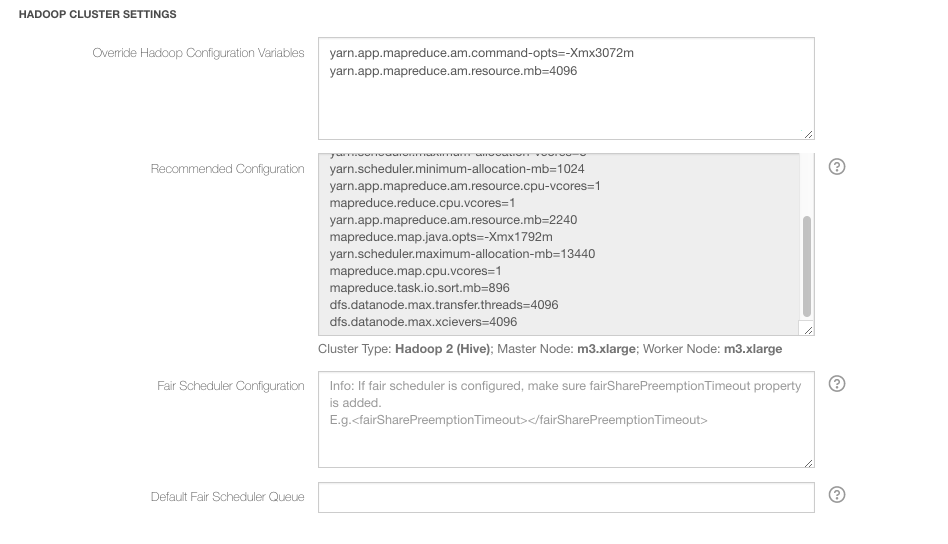

The default Hadoop 2 settings for a cluster is shown in the Edit Cluster page of a Hadoop 2 (Hive) cluster. (Navigate to Clusters and click the edit button that is against a Hadoop 2 (Hive) cluster. See Managing Clusters for more information.) A sample is as shown in the following figure.

In MapReduce, changing a task’s memory requirement requires changing the following parameters:

The size of the container in which the map/reduce task is launched.

Specifying the maximum memory (-Xmx) to the JVM of the map/reduce task.

The two parameters (mentioned above) are changed for MapReduce Tasks/Application Master as shown below:

Map Tasks:

mapreduce.map.memory.mb=2240 # Container size

mapreduce.map.java.opts=-Xmx2016m # JVM arguments for a Map task

Reduce Tasks:

mapreduce.reduce.memory.mb=2240 # Container size

mapreduce.reduce.java.opts=-Xmx2016m # JVM arguments for a Reduce task

MapReduce Application Master:

yarn.app.mapreduce.am.resource.mb=2240 # Container size

yarn.app.mapreduce.am.command-opts=-Xmx2016m # JVM arguments for an Application Master

Locating Logs

The YARN logs (Application Master and container logs) are stored at:

<scheme><defloc>/logs/hadoop/<cluster_id>/<cluster_inst_id>/app-logs.The daemon logs for each host are stored at:

<scheme><defloc>/logs/hadoop/<cluster_id>/<cluster_inst_id>/<host>.The MapReduce Job History files are stored at:

<scheme><defloc>/logs/hadoop/<cluster_id>/<cluster_inst_id>/mr-history.

Where:

scheme is the cloud-specific URI scheme:

s3://for AWS;wasb://,adl://, orabfs[s]://for Azure;oci://for Oracle OCI.defloc is the default storage location for the QDS account.

cluster_id is the cluster ID as shown on the Clusters page of the QDS UI.

cluster_inst_id is the cluster instance ID. It is the latest folder under

<scheme><defloc>/logs/hadoop/<cluster_id>/for a running cluster or the last-terminated cluster.

To extract a container’s log files, create a YARN command line similar to the following:

yarn logs \

-applicationId application_<application ID> \

-logsDir <scheme><qubolelogs-location>/logs/hadoop/<clusterid>/<cluster-instanceID>/app-logs \

-appOwner <application owner>