Understanding the Qubole-Snowflake Integration Workflows

The Qubole-Snowflake integration supports the following workflows, which you run from Qubole Data Service (QDS).

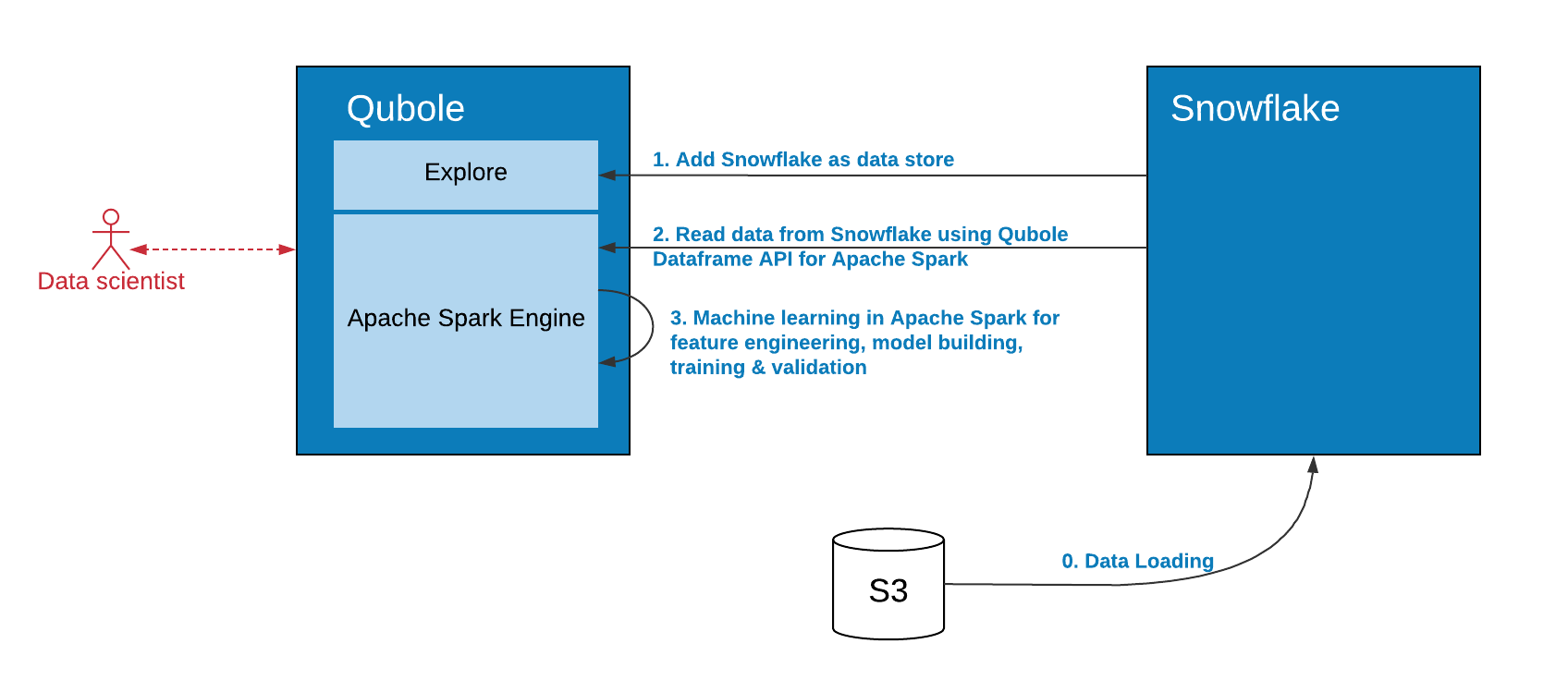

Advanced Data Preparation on Snowflake Data Only

This workflow involves the following tasks:

Adding the Snowflake data warehouse as a data store by using QDS.

Running the Qubole Dataframe API for Apache Spark to read data from the Snowflake data store from any of the QDS interfaces, such as the QDS Analyze page, notebooks, or the API.

Running a Spark command on QDS to prepare the data in Apache Spark through data cleansing, blending, and transformation.

Running the Qubole Dataframe API for Apache Spark from QDS to write results back to the Snowflake data store.
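As a rough illustration of the read, prepare, and write-back tasks above, the following PySpark sketch uses the open-source Snowflake Connector for Spark instead of the Qubole Dataframe API, whose exact calls are not shown on this page. It assumes the connector is available on the cluster and that a SparkSession is passed in as `spark`. The helper names and the choice of cleansing steps are hypothetical.

```python
# Sketch only: this uses the open-source Snowflake Connector for Spark,
# not the Qubole Dataframe API. All helper names here are hypothetical.
SNOWFLAKE_SOURCE = "net.snowflake.spark.snowflake"


def snowflake_options(url, user, password, database, schema, warehouse):
    """Build the connection options that the connector expects."""
    return {
        "sfURL": url,
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def read_table(spark, options, table):
    """Read a Snowflake table into a Spark DataFrame."""
    return (
        spark.read.format(SNOWFLAKE_SOURCE)
        .options(**options)
        .option("dbtable", table)
        .load()
    )


def cleanse(df, key_columns):
    """Drop rows with missing keys, then drop rows that repeat a key."""
    return df.dropna(subset=key_columns).dropDuplicates(key_columns)


def write_table(df, options, table, mode="overwrite"):
    """Write a DataFrame back to a Snowflake table."""
    (
        df.write.format(SNOWFLAKE_SOURCE)
        .options(**options)
        .option("dbtable", table)
        .mode(mode)
        .save()
    )
```

With a live session, the three helpers would be chained as `write_table(cleanse(read_table(spark, opts, "SOURCE_TABLE"), ["ID"]), opts, "TARGET_TABLE")`, where the table and column names are placeholders. On QDS, the Snowflake data store added in the first task would normally supply the connection details, so explicit credentials like these may not be needed.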

The following diagram is the graphical representation of the workflow.

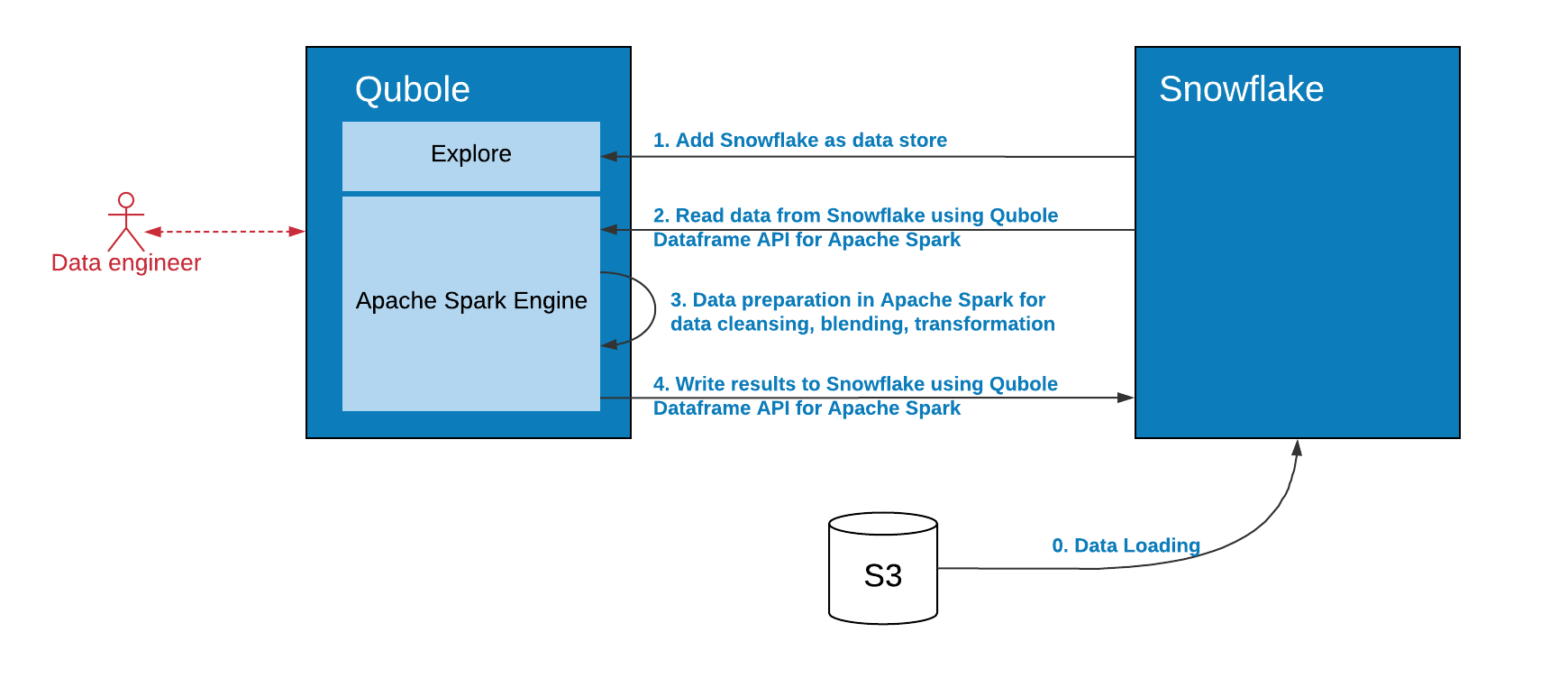

Advanced Data Preparation on Snowflake and Other Data Sources (AWS)

This workflow involves the following tasks:

Setting up Hive metastore with existing data in Amazon S3.

Adding the Snowflake data warehouse as a data store by using QDS.

Running a Spark command to prepare the data in S3 for analytics by using Apache Spark, including data cleansing, blending, and transformation.

Running the Qubole Dataframe API for Apache Spark from QDS to write results to the Snowflake data store.
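The tasks above can be sketched in PySpark roughly as follows. This is a minimal illustration, not the Qubole Dataframe API: it reads Hive metastore tables with `spark.table`, and writes with the open-source Snowflake Connector for Spark. It assumes a Hive-enabled SparkSession named `spark`, two hypothetical tables that share a join key, and an `sf_options` dictionary holding connector settings such as `sfURL` and `sfWarehouse`.

```python
# Sketch only: table names, the join key, and both helpers are hypothetical.
SNOWFLAKE_SOURCE = "net.snowflake.spark.snowflake"


def prepare_from_hive(spark, events_table, customers_table, join_key):
    """Cleanse one Hive table and blend it with another on a shared key."""
    events = (
        spark.table(events_table)     # Hive metastore table backed by S3 data
        .dropna(subset=[join_key])    # cleansing: drop rows missing the key
        .dropDuplicates()             # cleansing: remove exact duplicate rows
    )
    customers = spark.table(customers_table)
    # Blending: keep only events that match a known customer.
    return events.join(customers, on=join_key, how="inner")


def write_to_snowflake(df, sf_options, target_table):
    """Append the prepared rows to a Snowflake table."""
    (
        df.write.format(SNOWFLAKE_SOURCE)
        .options(**sf_options)
        .option("dbtable", target_table)
        .mode("append")
        .save()
    )
```

The inner join is only one possible blending choice. A left join would keep events without a matching customer, which may suit some analyses better.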

The following diagram is the graphical representation of the workflow.

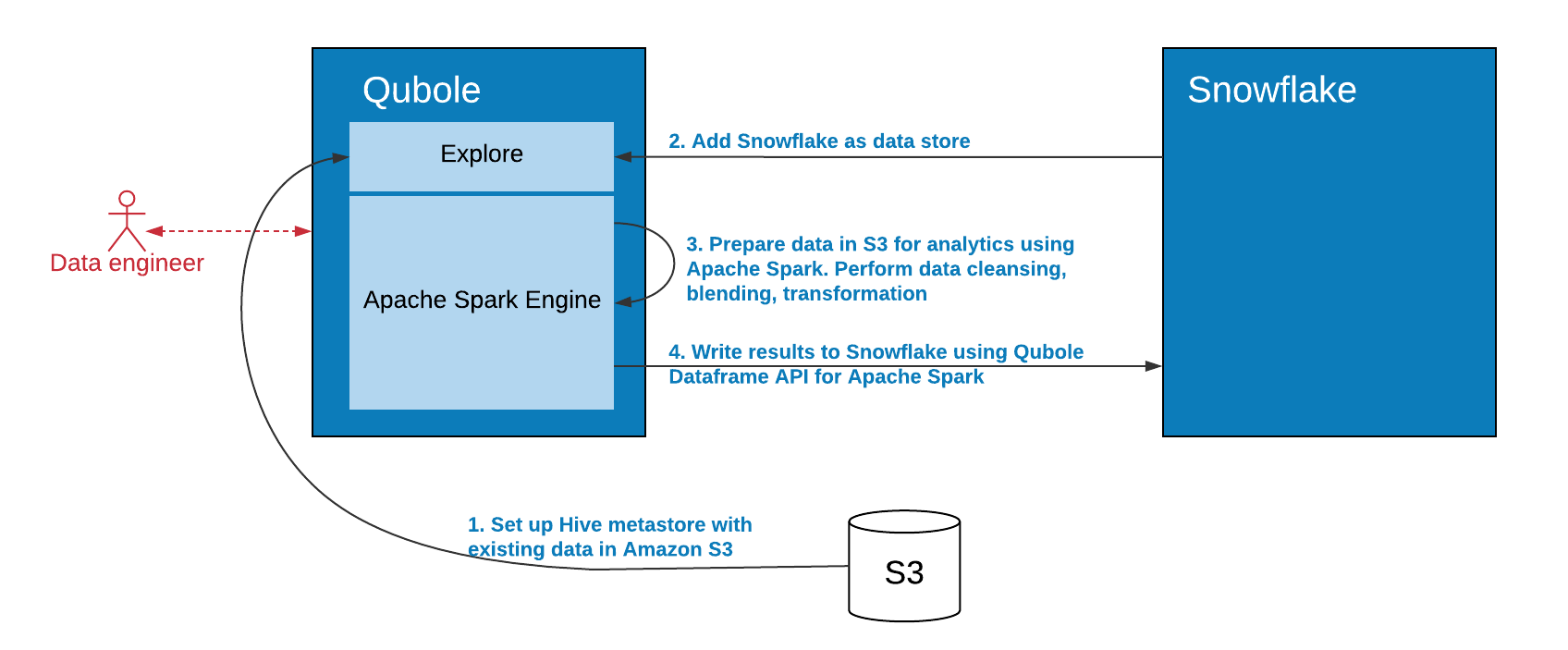

AI and Machine Learning (ML) on Snowflake Data

This workflow involves the following tasks:

Adding the Snowflake data warehouse as a data store by using QDS.

Running the Qubole Dataframe API for Apache Spark to read data from the Snowflake data store from any of the QDS interfaces, such as the QDS Analyze page, notebooks, or the API.

Running a Spark command on QDS for machine learning in Apache Spark, covering feature engineering, model building, training, and validation.
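As one possible shape for the machine learning task, the sketch below uses Spark MLlib to assemble features, train a model, and validate it on held-out rows. It assumes a DataFrame `df` that has already been read from the Snowflake data store, numeric feature columns without nulls, and a binary label column containing 0 and 1. The function names and the choice of logistic regression are illustrative and do not come from the integration itself.

```python
# Sketch only: function names and the choice of model are hypothetical.
def split_weights(validation_fraction):
    """Return [train, validation] weights for DataFrame.randomSplit."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    return [1.0 - validation_fraction, validation_fraction]


def train_and_validate(df, feature_cols, label_col,
                       validation_fraction=0.2, seed=42):
    """Fit a logistic regression pipeline; return (model, validation AUC)."""
    # Imported here so the module loads even where PySpark is not installed.
    from pyspark.ml import Pipeline
    from pyspark.ml.classification import LogisticRegression
    from pyspark.ml.evaluation import BinaryClassificationEvaluator
    from pyspark.ml.feature import VectorAssembler

    # Hold out part of the data for validation.
    train, valid = df.randomSplit(split_weights(validation_fraction), seed=seed)

    # Feature engineering: pack the input columns into one vector column.
    assembler = VectorAssembler(inputCols=feature_cols, outputCol="features")
    # Model building: a simple binary classifier.
    classifier = LogisticRegression(featuresCol="features", labelCol=label_col)
    # Training: fit both stages on the training rows only.
    model = Pipeline(stages=[assembler, classifier]).fit(train)

    # Validation: area under the ROC curve on the held-out rows.
    evaluator = BinaryClassificationEvaluator(
        labelCol=label_col, metricName="areaUnderROC"
    )
    auc = evaluator.evaluate(model.transform(valid))
    return model, auc
```

Fitting the assembler and the classifier as a single `Pipeline` means the same feature steps are applied when the model later scores new rows.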

The following diagram is the graphical representation of the workflow.