Composing Spark Commands in the Analyze Page

Use the command composer on the Analyze page to compose a Spark command in different languages.

See Running Spark Applications and Spark in Qubole for more information. For information about using the REST API , see Submit a Spark Command.

Spark queries run on Spark clusters. See Mapping of Cluster and Command Types for more information.

Qubole Spark Parameters

The Qubole parameter

spark.sql.qubole.parquet.cacheMetadataallows you to turn caching on or off for Parquet table data. Caching is on by default; Qubole caches data to prevent table-data-access query failures in case of any change in the table’s Cloud storage location. If you want to disable caching of Parquet table data, setspark.sql.qubole.parquet.cacheMetadatatofalse. You can do this at the Spark cluster or job level, or in a Spark Notebook interpreter.

In case of DirectFileOutputCommitter (DFOC) with Spark, if a task fails after writing files partially, the subsequent reattempts might fail with FileAlreadyExistsException (because of the partial files that are left behind). Therefore, the job fails. You can set the

spark.hadoop.mapreduce.output.textoutputformat.overwriteandspark.qubole.outputformat.overwriteFileInWriteflags totrueto prevent such job failures.

Ways to Compose and Run Spark Applications

You can compose a Spark application using:

Command Line. See Compose a Spark Application using the Command Line.

Python. See Compose a Spark Application in Python.

Scala. See Compose a Spark Application in Scala.

SQL. See Compose a Spark Application in SQL.

Notebook. See Run a Spark Notebook from the Analyze Query Composer. However, you can only run a notebook; you cannot compose a notebook paragraph.

Note

You can read a Spark job’s logs, even after the cluster on which it was run has terminated, by means of the offline Spark History Server (SHS). For offline Spark clusters, only event log files that are less than 400 MB are processed in the SHS. This prevents high CPU utilization on the webapp node. For more information, see this blog.

Note

You can use the --packages option to add a list of comma-separated Maven coordinates for external packages

that are used by a Spark application composed in any supported language. For example, in the

Spark Submit Command Line Options text field, enter --packages com.package.module_2.10:1.2.3.

Note

You can use macros in script files for Spark commands with subtypes scala (Scala), py (Python), R (R), sh (Command), and sql (SQL). You can also use macros in large inline content and large script files for scala (Scala), py (Python), R (R), @ and sql (SQL). This capability is not enabled for all users by default; create a ticket with Qubole Support

to enable it for your QDS account.

About Using Python 2.7 in Spark Jobs

If your cluster is running Python 2.6, you can enable Python 2.7 for a Spark job as follows:

Add the following configuration in the node bootstrap script (node_bootstrap.sh) of the Spark cluster:

source /usr/lib/hustler/bin/qubole-bash-lib.sh qubole-hadoop-use-python2.7

To run spark-shell/spark-submit on any node’s shell, run these two commands by adding them in the Spark Submit Command Line Options text field before running spark-shell/spark-submit:

source /usr/lib/hustler/bin/qubole-bash-lib.sh qubole-hadoop-use-python2.7

Note

Using the Supported Keyboard Shortcuts in Analyze describes the supported keyboard shortcuts.

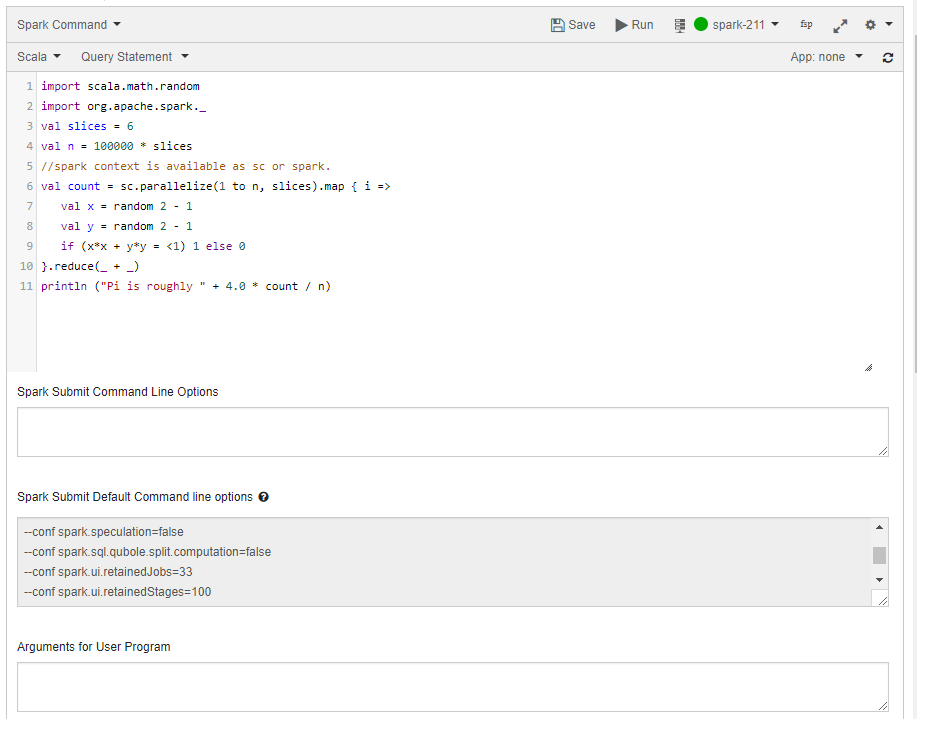

Compose a Spark Application in Scala

Perform the following steps to compose a Spark command:

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Compose the Spark application in Scala in the query editor. The query composer with Spark Command as the command type is as shown in the following figure.

Optionally enter command options in the Spark Submit Command Line Options text field to override the default command options in the Spark Default Submit Command Line Options text field.

Optionally specify arguments in the Arguments for User Program.

Click Run to execute the query. Click Save if you want to re-run the same query later. (See Workspace for more information on saving queries.)

The query result is displayed in the Results tab, and the query logs in the Logs tab. The Logs tab has a Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

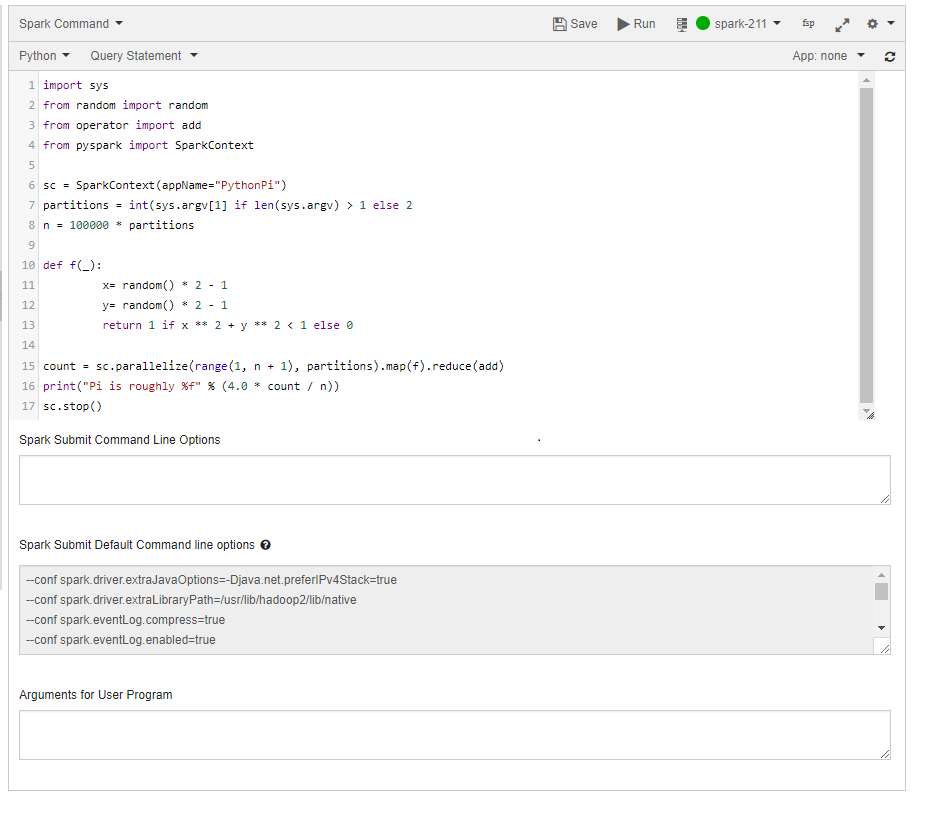

Compose a Spark Application in Python

Perform the following steps to compose a Spark command:

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Select Python from the drop-down list. Compose the Spark application in Python in the the query editor. The query composer with Spark Command as the command type is as shown in the following figure.

Optionally enter command options in the Spark Submit Command Line Options text field to override the default command options in the Spark Default Submit Command Line Options text field.

You can pass remote files in a Cloud storage location, in addition to the local files, as values to the

--py-filesargument.Optionally specify arguments in the Arguments for User Program.

Click Run to execute the query. Click Save if you want to run the same query later. (See Workspace for more information on saving queries.)

The query result is displayed in the Results tab, and the query logs in the Logs tab. The Logs tab has an Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

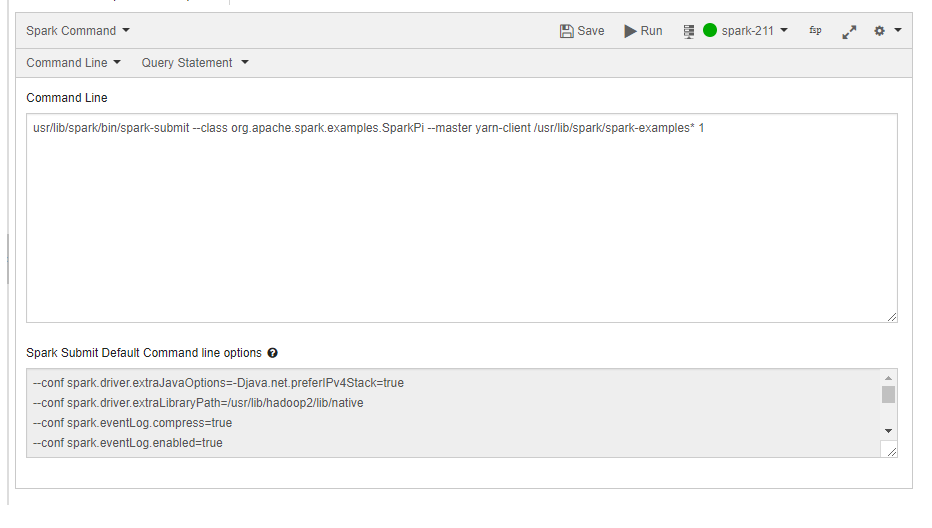

Compose a Spark Application using the Command Line

Note

Qubole does not recommend using the Shell command option to run a Spark application via Bash shell commands, because in this case automatic changes (such as increases in the Application Coordinator memory based on the driver memory, and the availability of debug options) do not occur. Such automatic changes do occur when you run a Spark application using the Command Line option.

Perform the following steps to compose a Spark command:

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Select Command Line from the drop-down list. Compose the Spark application using command-line commands in the the query editor. You can override default command options in the Spark Default Submit Command Line Options text field by specifying other options.

Note

Command Line supports

*.cmdlineor*.command_linefiles.The query composer with Spark Command as the command type is as shown in the following figure.

Click Run to execute the query. Click Save if you want to run the same query later. (See Workspace for more information on saving queries.)

The query result is displayed in the Results tab, and the query logs in the Logs tab. The Logs tab has an Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

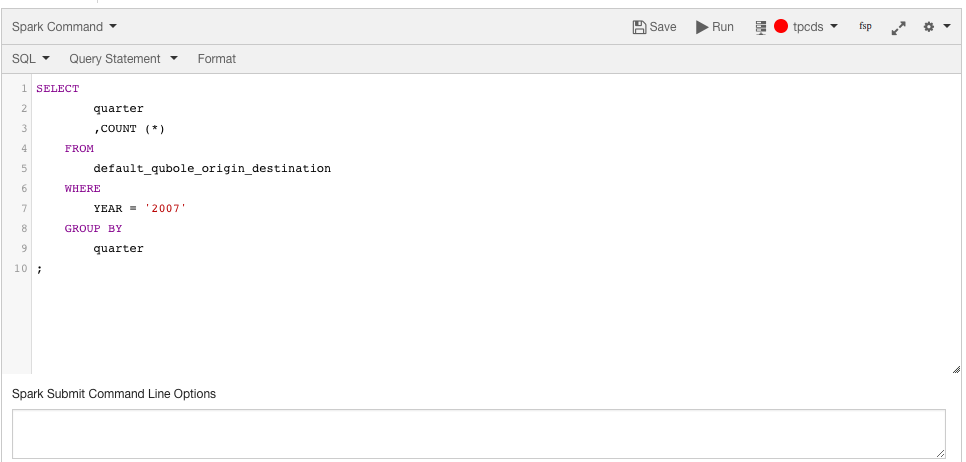

Compose a Spark Application in SQL

Note

You can run Spark commands in SQL with Hive Metastore 2.1. This capability is not enabled for all users by default; create a ticket with Qubole Support to enable it for your QDS account.

Note

You can run Spark SQL commands with large script files and large inline content. This capability is not enabled for all users by default; create a ticket with Qubole Support to enable it for your QDS account.

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Select SQL from the drop-down list. Compose the Spark application in SQL in the the query editor. Press Ctrl + Space in the command editor to get a list of suggestions.

The query composer with Spark Command as the command type is as shown in the following figure.

Optionally enter command options in the Spark Submit Command Line Options text field to override the default command options in the Spark Default Submit Command Line Options text field.

Click Run to execute the query. Click Save if you want to run the same query later. (See Workspace for more information on saving queries.)

The query result appers under the Results tab, and the query logs under the Logs tab. The Logs tab has an Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

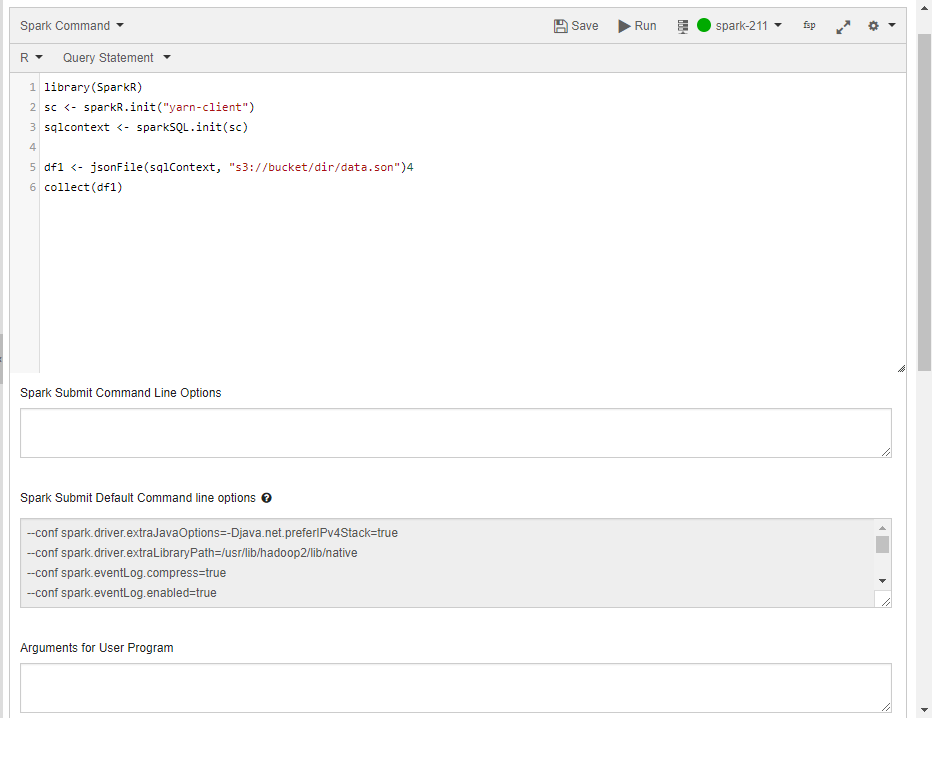

Compose a Spark Application in R

Perform the following steps to compose a Spark command:

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Select R from the drop-down list. Compose the Spark application in R in the query editor. The query composer with Spark Command as the command type is as shown in the following figure.

The example in the above figure uses Amazon S3 (s3://). For Azure, use wasb:// or adl:// or abfs[s], and for Oracle OCI, use oci://.

Optionally enter command options in the Spark Submit Command Line Options text field to override the default command options in the Spark Default Submit Command Line Options text field.

Optionally specify arguments in the Arguments for User Program.

Click Run to execute the query. Click Save if you want to run the same query later. (See Workspace for more information on saving queries.)

The query result is displayed in the Results tab, and the query logs in the Logs tab. The Logs tab has an Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

Run a Spark Notebook from the Analyze Query Composer

Qubole supports running a Spark notebook from the Analyze page’s query composer. To run a notebook using the Analyze page, perform the following steps:

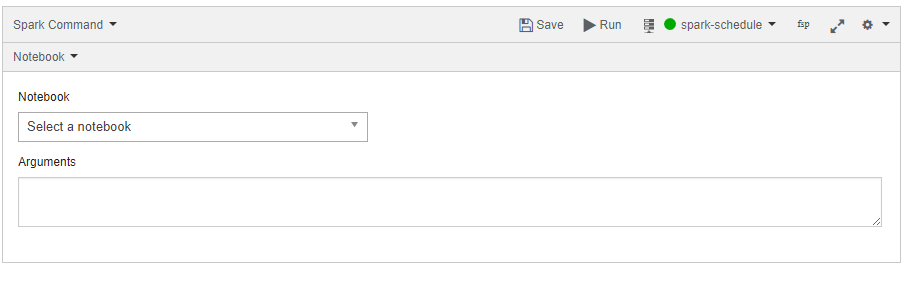

Navigate to the Analyze page and click Compose. Select Spark Command from the Command Type drop-down list.

By default, Scala is selected. Select Notebook from the drop-down list. The query composer with Spark Command as the command type is as shown in the following figure.

Select the Note from the Notebook’s drop-down list. You can select any cluster from the cluster drop-down list. The cluster associated with the selected notebook is also part of the cluster drop-down list.

In the Arguments text field, you can optionally add parameters to the notebook command. These parameters are passed to dynamic forms of the notebook. You can pass more than one variable. The syntax for using arguments is given below.

{"key1":"value1", "key2":"value2", ..., "keyN":"valueN"}

Where key1, key2, … keyN are the parameters that you want to pass before you run the notebook. If you need to change the corresponding values (value1, value2,…, valueN), you can do so each time you run command.

Click Run to execute the query. Click Save if you want to run the same query later. (See Workspace Tab for more information on saving queries.)

Note

If your cluster is running Zeppelin 0.8 or a later version, then all the paragraphs of the notebook are run sequentially.

The query result is displayed in the Results tab, and the query logs in the Logs tab. The Logs tab has an Errors and Warnings filter. For more information on how to download command results and logs, see Downloading Results and Logs. Note the clickable Spark Application UI URL in the Resources tab.

Known Issue

The Spark Application UI might display an incorrect state of the application when Spot Instances are used. You can view the accurate status of the Qubole command in the Analyze or Notebooks page.

When the Spark application is running, if the coordinator node or the node that runs driver is lost, then the Spark Application UI might display an incorrect state of the application. The event logs are persisted to cloud storage from the HDFS location periodically for a running application. If the coordinator node is removed due to spot loss, then the cloud storage might not have the latest status of the application. As a result, the Spark Application UI might show the application in running state.

<<<<<<< HEAD To avoid this issue, it is recommended to use an On-Demand coordinator node. ======= To avoid this issue, it is recommended to use an on-demand coordinator node. >>>>>>> e5d54c101b3864a40f224d9b0e95fdac0aa6b475

Other Examples

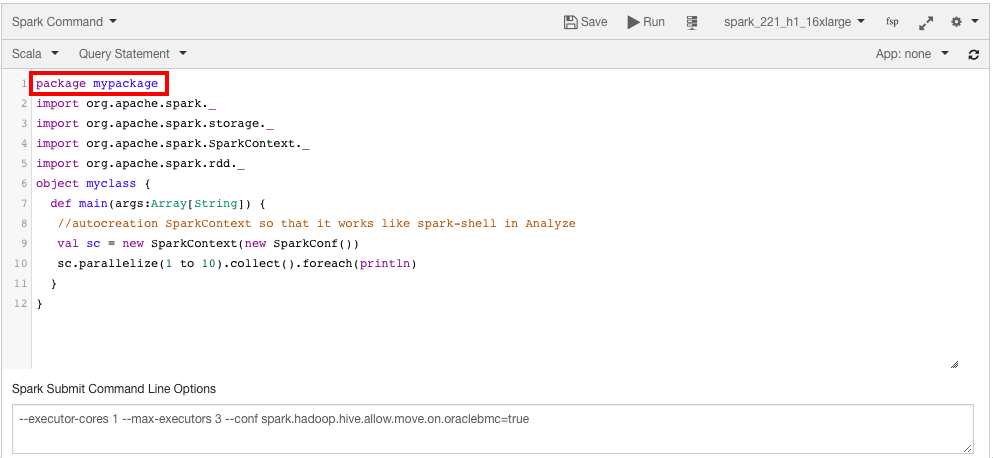

Example 1: Sample Scala program with the package parameter

The following figure shows a sample Scala program with the package mypackage parameter.

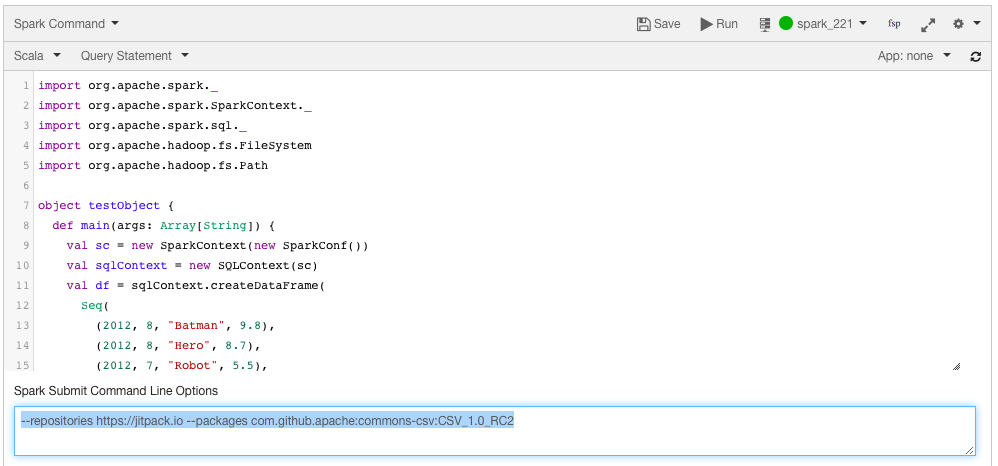

Example 2: Sample Scala program with the --repositories parameter

In this example, the spark-avro package is downloaded from the Jitpack maven repository and is made available to the spark program.

The following figure shows a sample Scala program with the --repositories https://jitpack.io --packages com.github.apache:commons-csv:CSV_1.0_RC2 parameter in the Spark Submit Command Line Options text field.

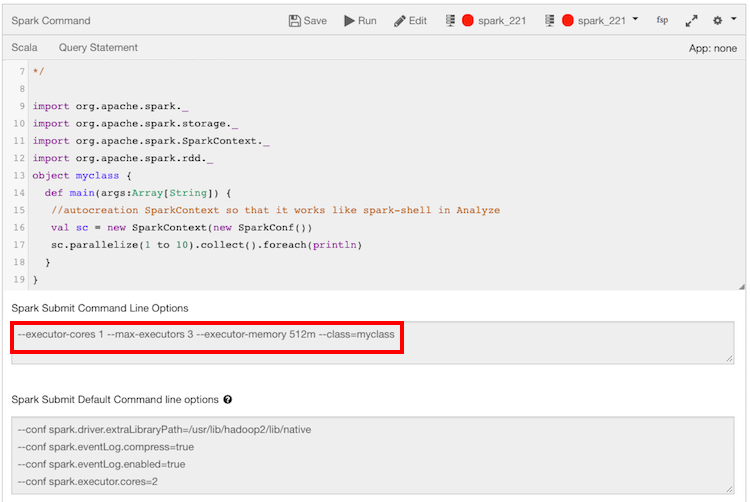

Example 3: Sample Scala program with the --class parameter

The following figure shows a sample Scala program with the --class=myclass parameter in the Spark Submit Command Line Options text field.

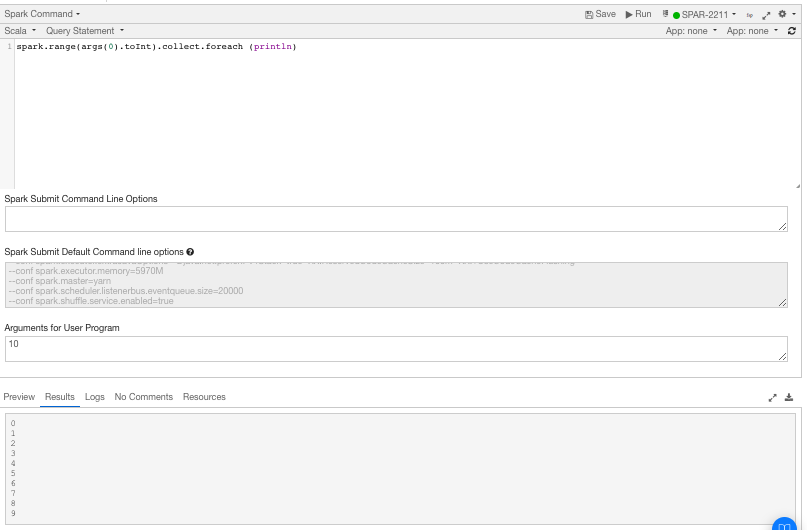

Example 4: Sample Scala program with user program arguments

The following figure shows a sample Scala program with the user argument args(0), value 10, and the respective result.